Algorithms and Spectral Graph Theory

Table of Contents

- 1. About these notes

- 2. Linear Algebra notation & review

- 3. Matrices associated to graphs and their spectra

- 4. Cheeger's Inequality

- 5. Random walks

- 6. Electric networks

- 7. Maximum Cut and the Last Eigenvalue

- 8. Spectral Clustering

- 8.1. Introduction

- 8.2. Notations

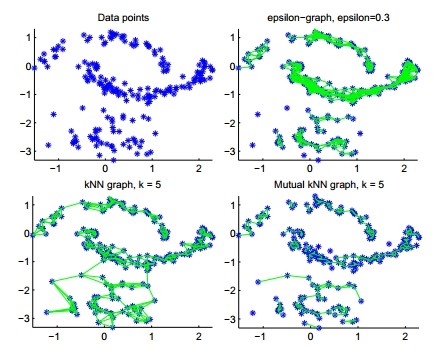

- 8.3. Different ways to construct the similarity graph

- 8.4. Review of graph Laplacians

- 8.5. Spectral clustering algorithms

- 8.6. Graph cut point of view

- 8.7. Perturbation theory point of view

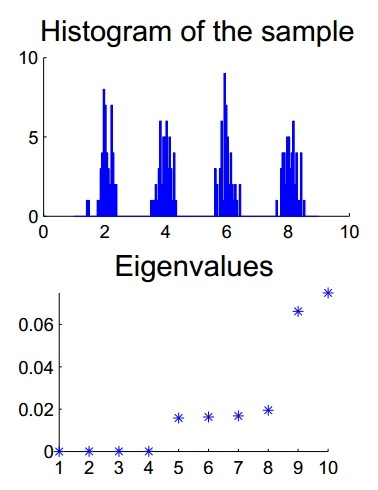

- 8.8. Justification by a slightly modified spectral algorithm

- 8.9. Practical details and issues

- 8.10. Conclusion

- 9. Bipartite Ramanujan Graphs and Interlacing Families

- 10. Improved Cheeger's Inequality

- 11. An Almost-Linear-Time Algorithm for Approximate Max Flow in Undirected Graphs, and its Multicommodity Generalizations

- 11.1. Resources

- 11.2. Problem definition and main result

- 11.3. Overview of the chapter

- 11.4. The Gradient Descent Method for general norms

- 11.5. Approximate Algorithm for the Maximum Flow Problem

- 11.5.1. Reformulation as Minimum Congestion Flow problem

- 11.5.2. Reformulation as a circulation problem

- 11.5.3. Reformulation as an unconstrained problem

- 11.5.4. Approximating \(\|\cdot\|_\infty\) by a smooth function

- 11.5.5. Solving the Maximum Flow Problem using the Gradient Descent Method

- 11.5.6. Constructing a projection matrix

\(\newcommand{\deff}{\mathrel{\mathop:}=}\newcommand{\rank}{\text{rank}}\newcommand{\trace}{\text{trace}}\newcommand{\onesv}{\mathbb{1}}\newcommand{\zerov}{\mathbb{0}}\newcommand{\E}{\mathbb{E}}\newcommand{\Span}{\text{span}}\newcommand{\R}{\mathbb{R}}\)

\(\newcommand{\extCur}{f^{\text{ext}}}\newcommand{\effResist}{R^{\text{eff}}}\)

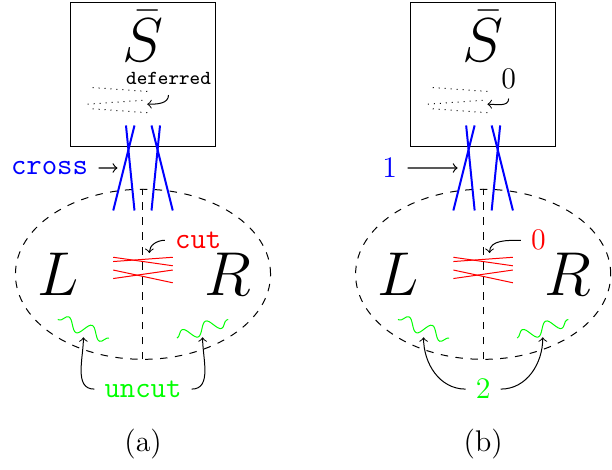

\(\newcommand{\Cut}{\mathtt{cut}}\newcommand{\Uncut}{\mathtt{uncut}}\newcommand{\Deferred}{\mathtt{deferred}}\newcommand{\Cross}{\mathtt{cross}}\)

\(\newcommand{\norm}[1]{\|{#1}\|}\newcommand{\Norm}[1]{\left\|{#1}\right\|}\)

\(\newcommand{\sym}{\mathrm{sym}}\newcommand{\sgn}{\mathrm{sgn}}\newcommand{\fix}{\mathrm{fix}}\)

1 About these notes

These lecture notes are from course CO759 (Algorithms and Spectral Graph Theory) offered in Summer 2014. They are mostly based on lecture notes by Dan Spielman and/or Lap Chi Lau. The notes and illustrations where mostly prepared by Fidel Barrera-Cruz. Chapters 8, 9 and 10 were written by Hangmeng, Miaolan and Mehdi respectively who were graduate students taking the course.

These notes are released under the terms of the GNU Free Documentation License.

2 Linear Algebra notation & review

2.1 Some general notation

\([n]=\{1,\dots,n\}\), where \(n\) is a positive integer.

matrix \(A \in \mathbb{R}^{n\times{n}}\), column vector \(x\in \mathbb{R}^n\), then row vector is \(x^T\)

\(a_{i,j}\) : \((i,j)\)-th entry of \(A, \quad A_j\) : \(j\)-th column of \(A\),

\(\quad \widetilde{A}_{i,j}\) : matrix obtained from \(A\) by deleting \(i\)-th row and \(j\)-th column

2.2 Linear independence, span, basis, dimension, rank, \(\ldots\)

Linear (in)dependence: set of vectors \(\{ v_1,\dots,v_k \}\) is linearly independent if

\begin{equation} \nonumber c_1v_1+\dots+c_kv_k=0 \quad \Longrightarrow \quad c_i=0,\forall i \end{equation}otherwise the set is called linearly dependent.

Span of a set of vectors \(S\), denoted \(\text{span}(S)\), is the set of all linear combinations of the vectors in \(S\). If \(\text{span}(S)=\mathbb{R}^n\), then \(S\) is said to span \(\mathbb{R}^n\).

Basis of \(\mathbb{R}^n\) (or, of a vector space \(V\)) is a linearly independent set of vectors that spans \(\mathbb{R}^n\) (or, \(V\)).

Dimension of a vector space \(V\), denoted \(\text{dim}(V)\), is the size of a basis of \(V\).

For subspaces \(V_1\) and \(V_2\) (of a vector space \(V\)), the sum is \(\{ v_1 + v_2 : v_1\in V_1, v_2\in V_2 \}\) and it is denoted \(V_1 + V_2\). The intersection \(V_1\cap V_2 = \{ v : v\in V_1, v\in V_2 \}\) is a subspace, and we have \(V_1\cap V_2 \subseteq V_i \subseteq V_1+V_2,~i=1,2\).

(Dimension identity): Let \(V\) be a vector space (finite dimensional), and let \(V_1,V_2\) be subspaces of \(V\). Then

\begin{equation} \nonumber \text{dim}(V_1) + \text{dim}(V_2) = \text{dim} (V_1\cap V_2) + \text{dim} (V_1 + V_2). \end{equation}Nullspace (kernel) of \(A\): \(\text{nullsp}(A) = \{ x\in\mathbb{R}^n : Ax=0 \}\), and \(\text{null}(A)\) denotes the dimension of \(\text{nullsp}(A)\).

Range (image) of \(A\): \(\text{range}(A) = \{ Ax : x\in\mathbb{R}^n \}\), and its dimension is denoted \(\text{rank}(A)\); thus, \(\text{rank}(A) = \max\) no. of linearly independent columns of \(A\).

\begin{equation} \nonumber \text{rank}(A) + \text{null}(A) = n \end{equation}2.3 Determinant

\(\det(A) = \sum_{j=1}^n (-1)^{i+j} a_{i,j} \det( \widetilde{A}_{i,j} )\)

\(\det(A) = \sum_{\pi\in S^n} \text{sign}(\pi) \prod_{i=1}^n a_{i,\pi(i)}\), where \(\pi: [n] \rightarrow [n]\) is a permutation of \([n]\) (= index set of \(A\)), and \(\text{sign}(\pi) = (-1)^{\text{inv}(\pi)}\), where \(\text{inv}(\pi)\) denotes the number of inversions of \(\pi\), which is, \(\{(i,j):i\lt j\text{ and }\pi(i)>\pi(j)\}\)

\begin{align} \nonumber \det(A) \not=0 \quad &\text{ iff } \text{rank}(A)=n \notag \\ \det(A B) = \quad &\det(A) ~ \det(B) \notag \\ \text{rank}(A)=n \quad &\quad \Longrightarrow \quad A \text{ can be written as a product of elementary matrices} \end{align}2.4 Eigenvalues & eigenvectors

A nonzero-vector \(x\in\mathbb{R}^n\) is called an eigenvector of \(A\) if there exists a number \(\lambda\) s.t. \(Ax = \lambda x\) holds. We call \(\lambda\) an eigenvalue of \(A\).

\begin{equation} \nonumber Ax=\lambda x \text{ iff } (A-\lambda{I})x=0 \text{ iff } \text{null}(A-\lambda{I})\not= 0 \text{ iff } \text{rank}(A-\lambda{I})\lt n \text{ iff } \det(A-\lambda{I})=0 \end{equation}\(\det(A-\lambda{I})\) is a polynomial of \(\lambda\) of degree \(n\), called the characteristic polynomial of \(A\); each root is an eigenvalue. Thus, \(\det(A-\lambda{I}) = \prod_{i=1}^n (\lambda - \lambda_i)\), where \(\lambda_1,\dots,\lambda_n\) are the roots of the characteristic polynomial; so \(\lambda_1,\dots,\lambda_n\) are the eigenvalues of \(A\). If one of these roots \(\lambda_i\) appears \(k\) times, then we say that \(\lambda_i\) is an eigenvalue of multiplicity \(k\).

For a fixed eigenvalue \(\lambda\), any non-zero vector in \(\text{nullsp}(A-\lambda{I})\) is an eigenvector.

All real symmetric matrices have real eigenvalues (follows from Spectral theorem).

Geometric view: an eigenvector is a direction that is fixed (up to scaling) by the linear transform defined by \(A\)

Eigenspace: for an eigenvalue \(\lambda\), we call \(\text{nullsp}(A-\lambda{I})\) the eigenspace of \(\lambda\). We have: \(\text{null}(A-\lambda{I})\) is the geometric multiplicity of \(\lambda\).

2.5 Inner products, norm, orthonormal basis

Inner product of vectors \(u, v \in \mathbb{R}^n\), namely, \(\sum_{j=1}^n u_j v_j\) is denoted by \(\langle u,v\rangle\) (or by \(u\cdot{v}\))

Norm (or, length) of a vector \(v\) is \(\Vert v\Vert = \sqrt{\langle v,v\rangle}\)

Orthogonal (or, perpendicular) vectors \(u,v\): \(\langle u,v\rangle=0\)

Set of vectors \(\{v_1,\dots,v_n\}\) is orthogonal if \(\langle v_i,v_j\rangle=0,~ \forall i\neq{j}\)

Set of vectors \(\{v_1,\dots,v_n\}\) is orthonormal if it is orthogonal, and \(\Vert v_i\Vert=1, \forall i\)

Given any basis, we can construct an orthogonal basis by the Gram-Schmidt procedure: Given a basis \(B=\{w_1,\dots,w_k\}\), define the orthogonal basis \(B' = \{v_1,\dots,v_k\}\) by \(v_k = w_k - \sum_{j=1}^k \frac{ \langle w_k,v_j\rangle }{\Vert v_j\Vert} \; v_j,~\forall k: 2\le k\le n\) (and, \(v_1=w_1\)).

Then \(B''=\{ v_1/\Vert v_1\Vert, \dots, v_n/\Vert v_n\Vert \}\) is an orthonormal basis.

If \(B\) is a matrix whose columns form an orthonormal basis, then \(B^T B = I\) and hence the inverse of \(B\), denoted \(B^{-1}\), is equal to \(B^T\).

2.5.1 Cauchy-Schwarz Inequality

For vectors \(v,w \in\mathbb{R}^n\) we have

\begin{equation} \nonumber \vert\langle v,w\rangle\vert \leq \Vert v\Vert ~~ \Vert w\Vert, \end{equation}thus,

\begin{equation} \nonumber (\sum_{i=1}^{n} v_{i} w_{i}) ^2 \leq (\sum_{i=1}^{n} v_{i}^{2}) (\sum_{i=1}^{n} w_{i}^{2}) \end{equation}2.6 Spectral theorem

Let \(A\) be a real symmetric matrix. Then there exists an orthonormal basis of eigenvectors of \(A\) and the corresponding eigenvalues are real.

- Prove that all the eigenvalues of \(A\) are real.

- Find an eigenvalue \(\alpha_{n}\) and an eigenvector \(v_{n}\) of \(A\), and an orthonormal basis \(\mathcal{R}=\{u_{1},\ldots,u_{n-1}\}\) of the orthogonal complement of \(\Span(v_{n})\).

- Consider the matrix \(R\) having as columns the elements of \(\mathcal{R}\) and construct the \((n-1)\times (n-1)\) symmetric matrix \(B\) that has as entries the coefficients to obtain the vectors \(Au_{i}\) as a linear combination of elements in \(\mathcal{R}\).

- Inductively obtain the orthonormal basis of eigenvectors for \(B\), \(v_{1}'\ldots,v_{n-1}'\).

- The orthonormal basis of eigenvectors of \(A\) is obtained by normalizing the orthogonal basis of eigenvectors of \(A\) \(Rv_{1}',\ldots,Rv_{n-1}',v_{n}\).

We begin by showing that all the eigenvalues of \(A\) are real and then we proceed to show how to find the orthonormal basis of eigenvectors of \(A\) by induction.

Consider the polynomial \(p(\lambda)=\det(A-\lambda I)\). This polynomial has \(n\) roots, some may be complex. Let us begin by showing that all roots are real.

If \(u\) and \(v\) are eigenvectors of \(A\) associated to different eigenvalues, say \(\lambda_{u}\) and \(\lambda_{v}\) respectively, then \(u\) and \(v\) are orthogonal.

We have that \(\lambda_{v}u^{\intercal}v= u^{\intercal}(Av)=u^{\intercal}Av=(Au)^{\intercal}v=\lambda_{u}u^{\intercal}v\). Therefore \((\lambda_{v}-\lambda_{u})u^{\intercal}v=0\), and since \(\lambda_{v}\neq \lambda_{u}\) it follows that \(u^{\intercal}v=0\).

Now, let \(\lambda\) be a root of \(p\) and let \(v\) be an eigenvector associated to \(\lambda\). Then \(Av=\lambda v\). Taking complex conjugate on bot sides yields \(A\bar{v}=\bar{\lambda}\bar{v}\). So \(\bar{v}\) is an eigenvector of \(A\) with eigenvalue \(\bar{\lambda}\). Since \(v\neq 0\) we have that \(v^{\intercal}\bar{v}>0\). Thus by the previous claim it follows that the associated eigenvalues must be equal, that is, \(\lambda=\bar{\lambda}\). Therefore \(\lambda\in \mathbb{R}\).

We now know all eigenvalues of \(A\) are real. Let \(\alpha_{n}\) be one such eigenvalue. Before constructing the orthonormal basis of eigenvectors let us prove the following claim.

If \(U\) is an \(A\)-invariant subspace, then \(U^{\perp}\) is also an \(A\)-invariant subspace.

Let \(v\in U^{\perp}\) and \(u\in U\). Then \(u^{\intercal}(Av)=(Au)^{\intercal}v=(u')^{\intercal}v=0\). Thus \(Av\) is orthogonal to any \(u\in U\) and so \(Av\in U^{\perp}\), as desired.

Now, let \(v_{n}\) be an eigenvector associated to the eigenvalue \(\alpha_{n}\) and let \(U_{n}=\text{span}(v_{n})\). Observe that \(U_{n}\) is \(A\)-invariant. By the previous claim it follows that \(U_{n}^{\perp}\) is also \(A\)-invariant. Let \(u_{1},\ldots,u_{n-1}\) be an orthonormal basis of \(U_{n}^{\perp}\) and let \(R=[u_{1} \dots u_{n-1}]\). Then \(AR=[Au_{1} \dots Au_{n-1}]\), and since \(U_{n}^{\perp}\) is \(A\)-invariant we have that \(Au_{i}=\sum_{j\in [n-1]}b_{j,i}u_{j}\). So

\begin{equation} \nonumber AR=R \left[\begin{array}{rrrr} b_{1,1} & b_{1,2} & \dots & b_{1,n-1} \\ b_{2,1} & b_{2,2} &\dots & b_{2,n-1} \\ \vdots & \vdots & & \vdots\\ b_{n-1,1} & b_{n-1,2} & \dots & b_{n-1,n-1} \end{array}\right]= RB. \end{equation}Multiplying both sides by \(R^{T}\) and using the fact that \(u_{1},\ldots,u_{n-1}\) is an orthonormal basis we obtain \(R^{\intercal}AR=B\).

We claim that \(B\) is a symmetric matrix. Indeed since,

\begin{equation} \nonumber \begin{split} B_{i,j}&=u_{i}^{\intercal}Au_{j}\\ &=(Au_{j})^{\intercal}u_{i}\\ &=u_{j}^{\intercal}Au_{i}\\ &= B_{j,i}. \end{split} \end{equation}Thus \(B\) is an \((n-1)\times (n-1)\) real symmetric matrix. By induction hypothesis we have that \(B\) admits an orthonormal basis of eigenvectors \(v_{1}',\ldots,v_{n-1}'\) associated to eigenvalues \(\beta_{1},\ldots,\beta_{n-1}\). In particular \(Bv_{i}'=\beta_{i}v_{i}'\), which implies that \(R^{\intercal}ARv_{i}'=\beta_{i}v_{i}'\), or \(A(Rv_{i}')=\beta_{i}(R v_{i}')\). That is \(v_{i}\mathrel{\mathop:}=Rv_{i}'\) is an eigenvector of \(A\) with associated eigenvalue \(\beta_{i}\). Note that \(v_{n}\neq v_{i}\) for \(1\leq i\leq n-1\) since \(v_{i}\in U_{n}^{\perp}\) by the previous claim. Therefore \(\mathcal{B}=\{v_{1},\ldots,v_{n} \}\) is an orthogonal basis of eigenvectors of \(A\). The desired orthonormal basis can now be obtained by normalizing \(\mathcal{B}\).

Application: Power of matrix Let \(L\) be a matrix whose columns form an orthonormal basis of eigenvectors of \(A\). Then \(AL = LD\), where \(D\) is the diagonal matrix of eigenvalues. Thus, \(A = LDL^{-1} = LDL^T\).

We may compute the power \(A^k\) by multiplying the above \(k\) times to get \((LDL^T) (LDL^T) \dots (LDL^T) = L D^k L^T\). Note that \(D^k\) is easily computed: replace each entry \(\alpha\) of \(D\) by its power \(\alpha^k\).

Application: Eigen decomposition

Let \(\{v_1,\dots,v_n\}\) be an orthonormal basis of eigenvectors.

It can be seen that \(I = v_1v_1^T + v_2v_2^T + \dots + v_nv_n^T\).

Hence, we have \(Ax = A (v_1v_1^T + v_2v_2^T + \dots + v_nv_n^T) x = ( \lambda_1 v_1v_1^T + \lambda_2 v_2v_2^T + \dots + \lambda_n v_nv_n^T ) x\). Thus, \(A = \lambda_1 v_1v_1^T + \lambda_2 v_2v_2^T + \dots + \lambda_n v_nv_n^T\).

Assume \(\lambda_i\neq 0, \forall i\). Then we have, \(A^{-1} = \frac{1}{\lambda_1} v_1v_1^T + \frac{1}{\lambda_2} v_2v_2^T + \dots + \frac{1}{\lambda_n} v_nv_n^T\) because

\begin{equation} \nonumber \begin{split} ( \lambda_1 v_1v_1^T + \lambda_2 v_2v_2^T + \dots + \lambda_n v_nv_n^T ) ( \frac{1}{\lambda_1} v_1v_1^T + \frac{1}{\lambda_2} v_2v_2^T + \dots + \frac{1}{\lambda_n} v_nv_n^T ) &= (v_1v_1^T + v_2v_2^T + \dots + v_nv_n^T)\\ &= I. \end{split} \end{equation}2.7 Useful & Simple Inequalities

The following inequalities are useful; most of them are simple.

- For two real numbers \(\alpha, \beta\), we have \((\alpha + \beta)^2 \le 2 (\alpha ^2 + \beta ^2)\).

- For two integers \(\alpha, \beta \in \{-1,0,+1\}\), we have \((\alpha + \beta)^2 \le 2 |\alpha + \beta|\).

- Let \(\alpha_1,\beta_1\), \(\alpha_2,\beta_2\), …, \(\alpha_k,\beta_k\), be \(k\) pairs of nonnegative real numbers. Then we have

- Let \(x\in\mathbb{R}^{n}\) satisfy \(x \perp \mathbf{1}\) (thus, \(\sum_i x_i=0\)). Then \(\sum_{i\lt j} (x_i-x_j)^2 = n \sum_i x_i^2\).

2.8 References

This parts of the notes closely follows the expositions by Lau (week 1 and [[][]).

3 Matrices associated to graphs and their spectra

3.1 Matrices associated to graphs

Given a graph \(G\), there are several matrices we can associate to \(G\). Before stating them let us define \(D=\text{diag}(d_{1},\ldots,d_{n})\), where \(d_{i}\) denotes the degree of vertex \(i\). We can associate the following matrices to \(G\).

- The adjacency matrix \(A\). This is an \(n\times n\) \(01\)-matrix. The \(i,j\)-entry of \(A\), \(A_{i,j}\) is \(1\) if \(ij\in E\) and \(0\) otherwise.

- The normalized adjacency matrix \(\mathcal{A}\). This matrix is defined to be \(\mathcal{A}\deff D^{-1/2}AD^{-1/2}\).

- The Laplacian matrix \(L\). Here \(L\deff D-A\).

- The normalized Laplacian matrix \(\mathcal{L}\). This \(n\times n\) matrix is defined as \(\mathcal{L}\deff D^{-1/2}LD^{-1/2}=I-\mathcal{A}\).

Recall that the trace of a matrix \(M\) is defined to be the sum of its diagonal entries, that is, \(\trace(M)=\sum_{i=1}^{n}M_{i,i}\).

Let \(M\) be an \(n\times n\) matrix with eigenvalues \(\mu_{1},\ldots,\mu_{n}\). Then \(\trace(M)=\sum_{i=1}^{n}\mu_{i}\).

3.2 Spectra of the adjacency matrices of \(K_n\) and \(K_{m,n}\)

Let us begin by determining the spectrum of the adjacency matrix \(A\) for a couple of very specific kinds of graphs.

- Let \(G=K_{n}\), that is, let \(G\) be the complete graph on \(n\) vertices. In this case we have that \(A=J-I\), where \(J\) is the \(n\times n\) matrix with all entries equal to 1. Observe that \(I\) has \(1\) as an eigenvalue with multiplicity \(n\). Now, since \(J\) has rank \(1\), it follows that \(0\) is an eigenvalue of \(J\) with multiplicity \(n-1\). Finally, observe that \(\onesv\) is an eigenvector of \(J\) with eigenvalue \(n\). Thus \(J\) has as eigenvalues \(0\) with multiplicity \(n-1\) and \(n\) with multiplicity \(1\). This implies that \(A\) has eigenvalues \(-1\) with multiplicity \(n-1\) and \(n-1\) with multiplicity \(1\).

- Let \(G=K_{m,n}\), the complete bipartite graph with partite

sets \(X\) and \(Y\). We may assume, without loss of generality, that

\(X=\{1,\ldots,m\}\) and \(Y=\{m+1,\ldots,m+n\}\). Thus

\begin{equation*}

A= \left[\begin{array}{rr}

0& J_{m,n} \\

J_{m,n}^{\intercal} & 0 \\

\end{array}\right],

\end{equation*}

where \(J_{m,n}\) is the \(m\times n\) all ones matrix. Note that

\(\rank(A)=0\), so \(0\) is an eigenvalue of \(A\) with multiplicity \(n-2\). Now, since the trace of \(A\) is \(0\), we have that the sum of the eigenvalues of \(A\) is \(0\). Thus the two remaining eigenvalues, call them \(\alpha_{1}\) and \(\alpha_{2}\), must satisfy \(\alpha_{1}=-\alpha_{2}\). So far we know that

\begin{equation*} \begin{split} \det(A-\alpha I) &= \alpha^{m+n-2}(\alpha-\alpha_{1})(\alpha-\alpha_{2})\\ &= \alpha^{m+n-2}(\alpha^{2}-\alpha_{1}^{2})\\ &= \alpha^{m+n}-\alpha_{1}^{2}\alpha^{m+n-2}\\ &= \alpha^{m+n}-x\alpha^{m+n-2}, \end{split} \end{equation*}where \(x=\alpha_{1}^{2}\). By computing the determinant of \(\alpha I-A\) we can observe that for each edge of \(G\) there is exactly one \(\alpha^{m+n-2}\). Since there are precisely \(mn\) such terms this implies that the coefficient of \(\alpha^{m+n-2}\) is \(mn\), that is \(x=mn\). Therefore \(\alpha_{1}=\sqrt{mn}\) and \(\alpha_{2}=-\sqrt{mn}\). Thus \(K_{m,n}\) has eigenvalues \(\sqrt{mn}\) and \(-\sqrt{mn}\) with multiplicity \(1\), and \(0\) with multiplicity \(m+n-2\).

3.3 A characterization of bipartiteness

Recall that the spectrum of the adjacency matrix of the complete bipartite graph \(K_{m,n}\) is symmetric about \(0\). In fact, as we will see in the following result, this property charecterizes bipartite graphs.

Let \(G\) be a graph. Then \(G\) is bipartite if and only if for any nonzero eigenvalue \(\alpha\) of \(A\), \(-\alpha\) is also an eigenvalue of \(A\).

Let us begin by assuming \(G\) is bipartite, with partite sets \(X\) and \(Y\). Without loss of generality we may assume that \(X=\{1,\ldots,k\}\) and \(Y=\{k+1,\ldots,n\}\). Thus

\begin{equation*} A= \left[\begin{array}{rr} 0& B \\ B^{\intercal} & 0 \\ \end{array}\right], \end{equation*}where \(B\) is some \(k\times n-k\) \(01\)-matrix. Let \(\alpha\) be an eigenvalue of \(A\) with eigenvector \(z=[x,y]^{\intercal}\). Then

\begin{equation*} \left[\begin{array}{r} By \\ B^{\intercal}x \\ \end{array}\right] = \left[\begin{array}{rr} 0& B \\ B^{\intercal} & 0 \\ \end{array}\right] \left[\begin{array}{r} x \\ y \\ \end{array}\right] \\ = Az\\ = \alpha z\\ = \left[\begin{array}{r} \alpha x \\ \alpha y \\ \end{array}\right]. \end{equation*}So \(By=\lambda x\) and \(B^{\intercal}x=\lambda y\). We now have that

\begin{equation*} \left[\begin{array}{rr} 0& B \\ B^{\intercal} & 0 \\ \end{array}\right] \left[\begin{array}{r} x \\ -y \\ \end{array}\right] = \left[\begin{array}{r} -By \\ B^{\intercal}x \\ \end{array}\right] = -\alpha \left[\begin{array}{r} x \\ -y \\ \end{array}\right] \end{equation*}So \([x,-y]^{\intercal}\) is an eigenvector of \(A\) with eigenvalue \(-\alpha\). Note that \(k\) linearly independent eigenvectors associated to \(\lambda\) would yield \(k\) linearly independent eigenvectors associated to \(-\lambda\).

Let us now prove the converse implication. It can be shown by induction that the \(i,j\) entry of \(A^{k}\) is equal to the number of \(ij\)-walks of length \(k\) in \(G\). This implies that the entries of \(A^{k}\) are nonnegative. In particular \(A^{k}_{i,i}\geq 0\) for all \(i\in [n]\). We use this observation together with the fact that nonzero eigenvalues come in positive negative pairs to show that \(G\) is bipartite.

Note that if \(\alpha_{1},\ldots,\alpha_{n}\) are the eigenvalues of \(A\), then \(\alpha_{i}^{k},\ldots,\alpha_{n}^{k}\) are the eigenvalues of \(A^{k}\). THus, for odd \(k\) we have that \(\trace(A^{k})=\sum_{i\in [n]}\alpha_{i}^{k}=0\), and since \(A^{k}_{i,i}\geq 0\), it follows that \(A^{k}_{i,i}=0\) for all \(i\in [n]\). That is, \(G\) contains no \(ii\)-walks of odd length \(k\), in particular \(G\) contains no odd cycles. Therefore \(G\) is bipartite.

3.4 Positive semidefinite matrices and the Laplacian

Let us now turn our attention to the spectrum of the Laplacian matrix \(L\) of a graph \(G\). Recall that \(L\deff D-A\), where \(D\) is the diagonal matrix with \(D_{i,i}\) being the degree of vertex \(i\) and \(A\) is the adjacency matrix of \(G\).

Note that if we add the entries of a row in \(L\) we obtain \(0\), since \(d_{i}\) will cancel out with the \(d_{i}\) \(-1\)'s that are off the diagonal in that row. This implies that \(L\onesv =\zerov\), or that \(\onesv\) is an eigenvector with eigenvalue \(0\). We will now see that \(0\) is the smallest eigenvalue of \(L\). Let \(e=ij\) and let \(b_{e}\) be the \(n\times 1\) column vector with entry \(i\) being \(1\), entry \(j\) being \(-1\) and \(0\) elsewhere. It is left as an exercise to the reader to show that if \(B=[b_{e_{1}}\dots b_{e_{m}}]\) is the \(n\times m\) matrix with columns given by the \(b_{e}\) vectors defined above, then \(L=B B^{\intercal}\).

We need to introduce one more concept and prove one more lemma before we are able to show that \(0\) is the smallest eigenvalue of \(L\).

A real symmetric matrix is said to be positive semidefinite if \(x^{\intercal}Mx\geq0\) for all \(x\in \R^{n}\).

Let \(M\) be a real symmetric matrix. The following are equivalent

- \(M\) is positive semidefinite.

- All the eigenvalues of \(M\) are nonnegative.

- \(M=BB^{\intercal}\) for some matrix \(B\).

We begin by making an observation for real symmetric matrices. Since \(M\) is symmetric, it is similar to a diagonal matrix \(S\) having the eigenvalues of \(M\) in its diagonal. Furthermore \(M=RSR^{\intercal}\) with the columns of \(R\) defining an orthonormal basis of eigenvectors of \(M\).

Let us show that 1 implies 2. Since \(M\) is positive semidefinite taking \(x=Re_{i}\), with \(e_{i}\) being the \(i\)-th standard basis vector, we obtain that

\begin{equation*} 0\leq x^{\intercal}Mx=(Re_{i})^{\intercal}MRe_{i}=e_{i}^{\intercal}Se_{i}=S_{i,i}. \end{equation*}So \(S_{i,i}\geq 0\) for all \(i\in [n]\).

Now, we show that 2 implies 3. Since all entries of \(S\) are nonnegative and since \(S\) is diagonal we have that

\begin{equation*} M=RSR^{\intercal}=RS^{1/2}S^{1/2}R^{\intercal}=(S^{1/2}R^{\intercal})^{\intercal}S^{1/2}R^{\intercal}=B^{\intercal}B, \end{equation*}as desired.

Finally let us show that 3 implies 1. If \(M=BB^{\intercal}\), then

\begin{equation*} x^{\intercal}Mx=x^{\intercal}BB^{\intercal}x=(B^{\intercal}x)^{\intercal}(B^{\intercal}x)=\Vert B^{\intercal} x\Vert^{2}\geq 0, \end{equation*}for all \(x\in \R^{n}\). Thus \(M\) is positive semidefinite.

3.5 Connectedness and the Laplacian matrix

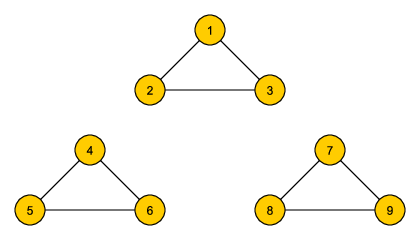

We have established that \(0\) is the smallest eigenvalue of the Laplacian matrix of a graph. In the following result we explore the relation between the eigenvalue of the Laplacian \(0\) and the number of connected components of a graph.

A graph is connected if and only of \(0\) is an eigenvalue of \(L\) with multiplicity \(1\).

If \(G\) is not connected, then we can partition its vertex set into two sets \(S_{1}\) and \(S_{2}\) such that no edge has an endpoint in both \(S_{1}\) and \(S_{2}\). Suppose without loss of generality that \(S_{1}=\{1,\ldots,k\}\) and \(S_{2}=\{k+1,\ldots,n\}\). Let \(\chi_{S_{1}}=[\underbrace{1,\ldots,1}_{k},\underbrace{0,\ldots,0}_{n-k}]\) and let \(\chi_{S_{2}}=\onesv-\chi_{S_{1}}\). Observe that \(L\chi_{S_{1}}=L\chi_{S_{2}}=\zerov\), so \(\chi_{S_{1}}\) and \(\chi_{S_{2}}\) are eigenvectors of \(L\) associated to eigenvalue \(0\). Thus \(0\) has multiplicity at least two.

Now, suppose \(G\) is connected and consider \(x^{\intercal}Lx=\sum_{ij\in E}(x_{i}-x_{j})^{2}\geq0\). If \(x\) is an eigenvector associated to \(0\), then \(Lx=\zerov\), so \(x^{\intercal}Lx=0\). This implies \(x_{i}=x_{j}\) for every edge \(ij\). Since \(G\) is connected, the value for a given \(x_{j}\) propagates to all vertices of \(G\). Thus \(x=c\onesv\), that is, any eigenvector is a multiple of \(\onesv\). Therefore the eigenvalue \(0\) has multiplicity \(1\).

3.6 Courant-Fischer inequality

For a real symmetric matrix \(A\) we define the Rayleigh quotient

\begin{equation} \nonumber R(x)\mathrel{\mathop:} = \frac{x^{\intercal}Ax}{x^{\intercal}x} = \frac{\sum_{i,j}a_{i,j}x_{i}x_{j}}{\sum_{i}x_{i}^{2}}. \end{equation}Suppose \(A\) has eigenvalues \(\alpha_{1}\geq \alpha_{2}\geq \ldots \geq \alpha_{n}\) with corresponding orthonormal eigenvectors \(v_{1},\ldots,v_{n}\).

The largest eigenvalue of \(A\) can be expressed as

\begin{equation} \nonumber \alpha_{1}=\max_{x\neq 0}\,R(x). \end{equation}Let \(x\in\mathbb{R}^{n}\) with \(x\neq 0\), then \(x=a_{1}v_{1}+\dots+a_{n}v_{n}\). So

\begin{equation} \label{eq:precourant-numerator} \begin{split} x^{\intercal}Ax &= (a_{1}v_{1}+\dots+a_{n}v_{n})^{\intercal}A(a_{1}v_{1}+\dots+a_{n}v_{n})\\ &=\sum_{i\in[n]}a_{i}^{2}v_{i}^{\intercal}Av_{i}+2\sum_{i\neq j}a_{i}a_{j}v_{i}^{\intercal}Av_{j}\\ &=\sum_{i\in[n]}\alpha_{i}a_{i}^{2} \end{split} \end{equation}Note that \(x^{\intercal}x=(a_{1}v_{1}+\dots+a_{n}v_{n})^{\intercal}(a_{1}v_{1}+\dots+a_{n}v_{n})=\sum_{i\in[n]}a_{i}^{2}\).

Therefore

\begin{equation} \nonumber R(x)=\frac{\sum_{i\in[n]}\alpha_{i}a_{i}^{2}}{\sum_{i\in[n]}a_{i}^{2}}\leq \alpha_{1}\frac{\sum_{i\in[n]}a_{i}^{2}}{\sum_{i\in[n]}a_{i}^{2}}=\alpha_{1}. \end{equation}Thus \(R(x)\leq \alpha_{1}\) for all \(x\neq 0\) and taking \(x=v_{1}\) we obtain \(R(v_{1})=\alpha_{1}\) which concludes the proof.

Let \(T_{k}\) denote the orthogonal complement of the subspace spanned by \(v_{1},\ldots,v_{k-1}\), that is, \(T_{k}=\Span(v_{1},\ldots,v_{k-1})^{\perp}\). Then

\begin{equation} \nonumber \alpha_{k}=\max_{x\in T_{k}}R(x). \end{equation}Let \(x\in T_{k}\) and write \(x=a_{1}v_{1}+\dots +a_{n}v_{n}\). Note that \(x^{\intercal}v_{i}=a\Vert v_{i}\Vert^{2}=a_{i}\). Since \(x\in T_{k}\) it follows that \(a_{i}=x^{\intercal}v_{i}=0\) for \(1\leq i\leq k-1\). Thus \(x=a_{k}v_{k}+\dots+a_{n}v_{n}\).

Now \(x^{\intercal}Ax=\sum_{i=k}^{n}a_{i}^{2}\alpha_{i}\), by a similar reasoning as in \eqref{eq:precourant-numerator}. Also \(x^{\intercal}x=\sum_{i=k}^{n}a_{i}^{2}\). Therefore we have that

\begin{equation} \nonumber R(x)=\frac{\sum_{i=k}^{n}a_{i}^{2}\alpha_{i}}{\sum_{i=k}^{n}a_{i}^{2}}\leq \alpha_{k} \frac{\sum_{i=k}^{n}a_{i}^{2}}{\sum_{i=k}^{n}a_{i}^{2}} =\alpha_{k}. \end{equation}Thus \(R(x)\leq \alpha_{k}\) for all \(x\in T_{k}\) and taking \(x=v_{k}\) we obtain \(R(v_{k})=\alpha_{k}\), as desired.

Note that the previous result allows us to obtain \(\alpha_{k}\) provided we know \(T_{k}\). The following result, the Courant-Fischer Theorem, gives a characterization of \(\alpha_{k}\) that does not depend on knowing \(T_{k}\) and it can be used for providing bounds for the eigenvalues of a matrix.

For a real symmetric matrix \(A\) we have that the \(k\)-th largest eigenvalue \(\alpha_{k}\) is given by

\begin{equation} \nonumber \alpha_{k}=\max_{\substack{S\subseteq \R^{n}\\ \dim(S)=k}}\min_{x\in S}\, R(x) = \min_{\substack{S\subseteq \R^{n}\\ \dim(S)=n+k-1}} \max_{x\in S}\, R(x). \end{equation}We only prove the \(\max\)-\(\min\) relation, the \(\min\)-\(\max\) relation can be proved by a similar argument. Let \(S_{k}=\Span(v_{1},\ldots,v_{k})\) and let \(x\in S_{k}\). Write \(x=a_{1}v_{1}+\dots+a_{k}v_{k}\). Following a similar reasoning as in the proofs of the previous two lemmas we have that

\begin{equation} \nonumber R(x)= \frac{\sum_{i\in[k]}\alpha_{i}a_{i}^{2}}{\sum_{i\in[k]}a_{i}^{2}} \geq \alpha_{k}\frac{\sum_{i\in[k]}a_{i}^{2}}{\sum_{i\in[k]}a_{i}^{2}}=\alpha_{k}. \end{equation}So

\begin{equation} \nonumber \max_{\substack{S\subseteq \R^{n}\\ \dim(S)=k}}\min_{x\in S}\, R(x) \geq \min_{x\in S_{k}}\, R(x)\geq \alpha_{k}. \end{equation}Now, any \(k\) dimensional vector space \(S\) intersects the \(n-k+1\) dimensional vector space \(T_{k}=\Span(v_{k},\ldots,v_{n})\). By the previous lemma we have that \(\alpha_{k}=\max_{x\in T_{k}}\, R(x)\), therefore

\begin{equation} \nonumber \max_{\substack{S\subseteq \R^{n}\\ \dim(S)=k}}\min_{x\in S}\, R(x) \leq \max_{\substack{S\subseteq \R^{n}\\ \dim(S)=k}}\min_{x\in S\cap T_{k}}\, R(x) \leq \alpha_{k.} \end{equation}Putting the last two inequalities together yields the first part of the result.

4 Cheeger's Inequality

4.1 Cheeger's Inequality (Laplacian)

In this section we study Cheeger's inequality, which provides a bound on how well connected a graph is in terms of the second smallest eigenvalue of its Laplacian matrix.

We have previously seen that a graph \(G\) is disconnected if and only if its second smallest eigenvalue \(\lambda_{2}\) is \(0\). Informally, Cheeger's inequality states that the second smallest eigenvalue is close to \(0\) if and only if there is a sparse cut in \(G\). Let us now formalize this by introducing the concept of conductance.

A measure of connectedness of a graph \(G=(V,E)\) is its conductance. The conductance of a nonempty set \(S\subseteq V\) is defined to be

\begin{equation} \nonumber \frac{e(S,V-S)}{\min\{|V|,|V-S|\}}. \end{equation}The conductance of the graph \(G\), \(\Phi\), is defined to be the minimum of the conductances over all nonempty subsets of \(V\), in symbols

\begin{equation} \nonumber \Phi = \min_{\emptyset\neq S\subseteq V}\frac{e(S,V-S)}{\min\{|V|,|V-S|\}}. \end{equation}Let \(G=(V,E)\) be an undirected graph, and let \(\Phi = \min_{\emptyset\not=S\subsetneq{V}} \frac{e(S,V-S)}{\min \{ |S|, |V-S|\}}\) denote its conductance; also, let \(d_{\max}\) denote the maximum of the degree of the nodes. Let \(\lambda_2\) denote the second smallest eigenvalue of the Laplacian matrix \(L(G)\). Then,

\begin{equation} \nonumber \frac{\lambda_{2}}{2} \leq \Phi \leq \sqrt{2\lambda_{2}d_{\max}} \end{equation}(Follows Kelner's course notes for MIT:18.409:F2009)

Lowerbound (Easy):

Let \(S\subsetneq{V}\) (nonempty) denote a cut \((S,V-S)\) that defines \(\Phi\). We proceed by using the fact that \(|S|\cdot|V-S|\geq n/2\min\{|S|,|V-S|\}\) and then showing that the resulting quotient is at least as big as the minimum of all quotients when restricted to the vectors orthogonal to \(\mathbb{1}\), which by the Courant-Fischer inequality is precisely \(\lambda_{2}\).

Then

\begin{equation} \nonumber \begin{split} \Phi &= \frac{ e(S,V-S) }{\min\{|S|,|V-S|\}} \geq (n/2) \frac{ e(S,V-S) }{ |S| |V-S| } \\ &= (n/2) \frac{ \sum_{ij\in E} (x_i-x_j)^2 } { \sum_{i\lt j} (x_i-x_j)^2 } \qquad (\mbox{taking $x = \chi^{S}-\chi^{V-S}$}) \\ & \geq (n/2) \min_{x\in \mathbb{R}^n,\; x\perp\mathbf{1}} \frac{ \sum_{ij\in E} (x_i-x_j)^2 } { \sum_{i\lt j} (x_i-x_j)^2 } \\ &=~ (n/2) \min_{x\in \mathbb{R}^n,\; x\perp\mathbf{1}} \frac{ \sum_{ij\in E} (x_i-x_j)^2 } { n \sum_{i} x_i ^2 } \\ &=~ \frac12 \lambda_2. \end{split} \end{equation}Upperbound (NotEasy – follows Spielman~F98, Kelner~F09):

For a vector \(y\in\mathbb{R}^n\), define \(R(y) = \frac{ y^T L y }{ y^T y }\), the Rayleigh quotient of \(y\).

Goal: for any vector \(x \perp\mathbf{1}\), we have \(R(x) \geq \frac{\Phi^2}{ 2d_{\max} }\), in other words, \(\Phi \leq \sqrt{ 2 d_{\max} R(x) }\).

(Aside: Since \(\lambda_2 = \min_{x\in \mathbb{R}^n,\; x\perp\mathbf{1}} R(x)\), we will get \(\lambda_2 \geq \frac{\Phi^2}{ 2d_{\max} }\).)

We prove something stronger:

Index the nodes s.t. \(x_1 \le x_2 \dots \le x_n\).

Assume \(n\) is odd, and let \(m\) denote the "median index", \(m = (n+1)/2\).

Define the canonical cuts for \(i=1,\dots,n\) by \((S_i,\overline{S_i})\) where \(S_i\) is defined as follows: () if \(i\leq m\), then \(S_i = \{1,\dots,i\}\), otherwise () (so, \(i>m\)) \(S_i = \{ i, i+1, i+2, \dots, n \}\).

Then, for the ``best'' of the canonical cuts, we have \(\Phi \leq e(S_i,\overline{S_i})/|S_i| \leq \sqrt{ 2 d_{\max} R(x) }\).

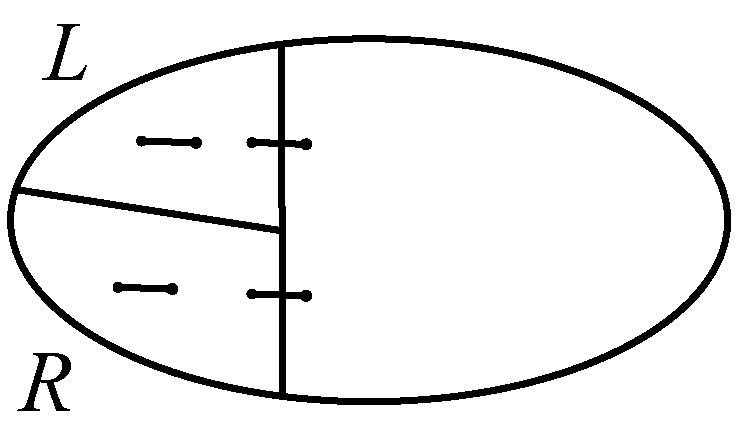

Preprocess to split \(G\) into left-subgraph and right-subgraph with common node \(m\)

Define \(y = x - x_m \mathbf{1}\), thus \(y_i = x_i - x_m, \forall i\).

Claim: \(R(x) \geq R(y)\).

Proofsketch: For the numerators (top part) we have \(x^T L x = \sum_{ij\in E} (x_i-x_j)^2 = y^T L y\). For the denominators (bot part) we have \(x^T x \leq y^T y = (x - x_m \mathbf{1})^T (x - x_m \mathbf{1}) = x^Tx + n x_m^2 - 2x_m (x^T\mathbf{1}) = x^Tx + n x_m^2\) (since \(x\perp\mathbf{1}\)).

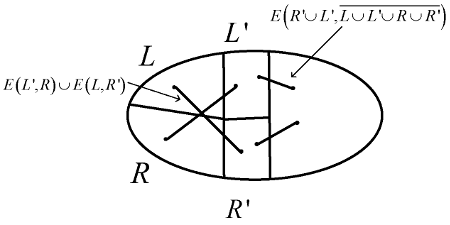

Next, transform \(G\) to \(G^{new} = (V, E^{new})\) by replacing each edge \(ij\) in the canonical cut \((S_m,\overline{S_m})\) by the two edges \(im, mj\); i.e., subdivide each edge in this cut by a new node and identify all the new nodes with \(m\); both \(V\) and \(y\) stay the same.

Claim: \(R(y, G) \geq R(y, G^{new})\).

Proofsketch: Consider the contributions from a transformed edge \(ij\) to the numerators (top part); we have, \((y_j - y_i)^2 = ((y_j - y_m) + (y_m - y_i))^2 \geq (y_j - y_m)^2 + (y_m - y_i)^2\).

Let the left subgraph \(G_L = (V_L, E_L)\) be the subgraph induced by \(V_L = \{1,\dots,m\}\).

Let the right subgraph \(G_R = (V_R, E_R)\) be the subgraph induced by \(V_R = \{m,m+1,\dots,n\}\).

Since \(y_m=0\) (this explains the choice of \(y\)), we have (for numerator) \(y^T L(G^{new}) y = \sum_{ij\in E_L} (y_i-y_j)^2 ~~+~~ \sum_{ij\in E_R} (y_i-y_j)^2\), and (for denominator) \(y^T y = \sum_i y_i^2 = (\sum_{i\in V_L} y_i^2) + (\sum_{i\in V_R} y_i^2)\).

Thus

\begin{equation} \nonumber \begin{split} R(y, G^{new}) &= \frac{ y^T L(G^{new}) y }{y^T y}\\ &=\frac{ \sum_{ij\in E_L} (y_i-y_j)^2 + \sum_{ij\in E_R} (y_i-y_j)^2 } { \sum_{i\in V_L} y_i^2 + \sum_{i\in V_R} y_i^2 }\\ &\geq \min \{ \frac{ \sum_{ij\in E_L} (y_i-y_j)^2 }{\sum_{i\in V_L} y_i^2}, \frac{ \sum_{ij\in E_R} (y_i-y_j)^2 }{\sum_{i\in V_R} y_i^2} \} \\ &= \min\{ R(y, G_L), R(y, G_R) \}. \end{split} \end{equation}We claim that each of \(R(y, G_L)\) and \(R(y, G_R)\) is \(\geq \frac{\Phi^2 }{2d_{\max}}\).

Plan for this part:

Key Lemma (Kelner):

Consider \(z \in \mathbb{R}^{V_L}_{-}\) and index the nodes s.t. \(z_1 \le z_2 \le \dots \le z_m = 0\). Then \(\sum_{ij\in E_L} |z_i - z_j| \ge \Phi(G) \sum_i |z_i|\).

Scale \(y\) s.t. \(\sum_{i\in V_L} y_i^2 = 1\).

Apply Cauchy-Schwarz inequality to get

\begin{equation} \nonumber \sum_{ij\in E_L} |y_i^2 - y_j^2| ~~=~~ \sum_{ij\in E_L} |y_i - y_j|~~|y_i + y_j| ~~\leq~~ \sqrt{\sum_{ij\in E_L} (y_i-y_j)^2} ~~ \sqrt{\sum_{ij\in E_L} (y_i+y_j)^2}. \end{equation}Thus

\begin{equation} \nonumber {\sum_{ij\in E_L} (y_i-y_j)^2} ~~\geq~~ \frac{ ( \sum_{ij\in E_L} |y_i^2 - y_j^2| )^2 }{ \sum_{ij\in E_L} (y_i+y_j)^2} \geq \frac{ (\Phi(G) \sum_{i\in V_L} y_i^2)^2 }{ 2 d_{\max} \sum_{i\in V_L} y_i^2 } ~~=~~ \frac { \Phi(G)^2 } { 2 d_{\max} }, \end{equation}where we used our assumption \(\sum_{i\in V_L} y_i^2 = 1\) for the last equation.

4.2 Cheeger's inequality (Normalized Laplacian)

In this section we prove a result which is analogous to that shown in the previous section. Here the result applies to the normalized laplacian \(\mathcal{L}\). Recall that for a graph \(G\) the normalized Laplacian is defined to be \(D^{-1/2}LD^{-1/2}\). By using the normalized Laplacian instead of the Laplacian matrix we remove the dependency on the maximum degree of \(G\) for the upper bound in Cheeger's inequality.

The conductance \(\hat{\Phi}(S)\) of a set \(\emptyset \neq S\subseteq V(G)\) is defined as

\begin{equation*} \hat{\Phi}(S)=\frac{e(S,V-S)}{\min\{\deg(S),\deg(V-S)\}}. \end{equation*}The conductance \(\hat{\Phi}(G)\) of a graph \(G\) is defined to be

\begin{equation*} \hat{\Phi}(G)=\min_{\emptyset \neq S\subseteq V}\hat{\Phi}(S)= \min_{\emptyset \neq S\subseteq V} \frac{e(S,V-S)}{\min\{\deg(S),\deg(V-S)\}}. \end{equation*}The main result of this section is the following.

- If \(y=D^{-1/2}x\), then

\[\lambda_{2}= \min_{y\perp D^{1/2}\mathbb{1}}R(y),\qquad\text{where}\qquad R(y)\mathrel{\mathop:}=\frac{\sum_{ij\in E}(y_{i}-y_{j})^{2}}{\sum_{i\in V}d_{i}y_{i}^{2}}.\nonumber\]

- To prove that \(\frac{\lambda_{2}}{2} \leq \hat{\Phi}(G)\) we simply take \(y\in\mathbb{R}^{n}\) with \(y_{i}=\frac{1}{\deg(S)}\) if \(i\in S\) and \(y_{i}=-\frac{1}{\deg{V-S}}\) if \(i\not\in S\), where \(S\) is a set of small conductance. We then analyze \(R(y)\) to obtain the desired result.

- Let \(c\in\mathbb{R}\) be such that \(\deg(\{i: y_{i}\lt c\})\leq m\) and \(\deg(\{i:y_{i}>c\})\leq m\). Then for \(z\mathrel{\mathop:}= y-c\mathbb{1}\) we have that \(R(z)\leq R(y)\). (This step is analogous to the "median index" splitting.)

- Write \(z=z^{\oplus}+z^{\ominus}\), where \(z^{\oplus}\) has only non-negative entries and \(z^{\ominus}\) has only non-positive entries. Then \(\min\{R(z^{\oplus}),R(z^{\ominus})\}\leq R(z)\).

- Find a "canonical cut" \(S\subseteq \text{supp}(z^{\oplus})\) or \(S\subseteq \text{supp}(z^{\ominus})\) that minimizes \(\frac{e(S,V-S)}{\deg(S)}\).

- Then \[\frac{e(S,V-S)}{\deg(S)}\leq \sqrt{2\min\{R(z^{\oplus}),R(z^{\ominus})\}}\leq \sqrt{2 R(z)}\leq \sqrt{2 R(y)},\nonumber\] for all \(y\perp D^{1/2}\mathbb{1}\). In particular

\[\hat{\Phi}(G)\leq\frac{e(S,V-S)}{\deg(S)}\leq \sqrt{2\lambda_{2}}. \nonumber\]

Let \(v_{1}\) be the eigenvector of \(\mathcal{L}\) associated to \(\lambda_{1}\). Recall that

\begin{equation} \label{eq:rayleigh_ev} \lambda_{2}=\min_{x\perp v_{1}} \frac{x^{\intercal}\mathcal{L}x}{x^{\intercal}x}=\min_{x\perp v_{1}}\frac{(D^{-1/2}x)^{\intercal}LD^{-1/2}x}{x^{\intercal}x}. \end{equation}Letting \(y\mathrel{\mathop:}= D^{-1/2}x\), \eqref{eq:rayleigh_ev} can be rewritten as

\begin{equation} \nonumber \lambda_{2}=\min_{y\perp D^{1/2}\mathbb{1}}\frac{y^{\intercal}Ly}{y^{\intercal}Dy}=\min_{\sum d_{i}y_{i}=0}\frac{\sum_{ij\in E}(y_{i}-y_{j})^{2}}{\sum_{i\in V}d_{i}y_{i}^{2}}. \end{equation}We use the following notation \[ \nonumber R(y)\mathrel{\mathop:}=\frac{y^{\intercal}Ly}{y^{\intercal}Dy}. \]

Define \(c\in\mathbb{R}\) to be a constant so that \(\deg(\{i: y_{i}\lt c\})\leq m\) and \(\deg(\{i:y_{i}>c\})\leq m\). Now take \(z\mathrel{\mathop:}= y-c\mathbb{1}\) and let us show that \(R(z)\leq R(y)\). Observe that \(y^{\intercal}Ly=z^{\intercal}Lz\). Let us show that \(y^{\intercal}Dy\leq z^{\intercal}Dz\). Since \(y\perp D\mathbb{1}\) we have that

\begin{equation} \nonumber z^{\intercal}Dz=y^{\intercal}Dy+c^{2}\mathbb{1}D\mathbb{1}-2y^{\intercal}D\mathbb{1}\leq y^{\intercal}Dy, \end{equation}thus \(R(z)\leq R(y)\), as claimed.

Let \(z^{\oplus}\) (\(z^{\ominus}\)) be the vector obtained from \(z\) by keeping only the positive (negative) entries and setting all other entries to \(0\). Let us show that

\begin{equation} \label{eq:cheeger_zminus_zplus} \min\{R(z^{\oplus}),R(z^{\ominus})\}\leq R(z). \end{equation}Note that \(z^{\intercal}Dz=(z^{\oplus})^{\intercal}Dz^{\oplus}+(z^{\ominus})^{\intercal}Dz^{\ominus}\). To prove our claim we show that \((z^{\oplus})^{\intercal}Lz^{\oplus}+(z^{\ominus})^{\intercal}Lz^{\ominus}\leq z^{\intercal}Lz\). Observe that that

\begin{equation} \nonumber z^{\intercal}Lz =\sum_{ij\in E}(z_{i}-z_{j})^{2}. \end{equation}We have the following

- Whenever \(z_{i}\) and \(z_{j}\) have the same sign, then \((z_{i}-z_{j})^2\) appears as a term in the expansion of \((z^{\oplus})^{\intercal}Lz^{\oplus}\) or in the expansion of \((z^{\ominus})^{\intercal}Lz^{\ominus}\).

- If \(z_{i}\) and \(z_{j}\) have opposite signs, say \(z_{j}\lt 0\lt z_{i}\). Then \((z_{i}-z_{j})^{2}\geq z_{i}^{2}+z_{j}^{2}=(z_{i}^{\oplus}-z_{j}^{\oplus})^2+(z_{i}^{\ominus}-z_{j}^{\ominus})^2\), since \(z_{j}^{\oplus}=z_{i}^{\ominus}=0\).

Therefore \[ \frac{(z^{\oplus})^{\intercal}Lz^{\oplus}+(z^{\ominus})^{\intercal}Lz^{\ominus}}{(z^{\oplus})^{\intercal}Dz^{\oplus}+(z^{\ominus})^{\intercal}Dz^{\ominus}}\leq \frac{z^{\intercal}Lz}{z^{\intercal}Dz}\nonumber\] and since \[\min\left\{\frac{A}{C},\frac{B}{D}\right\}\leq \frac{A+B}{C+D}\nonumber\] we have that inequality \eqref{eq:cheeger_zminus_zplus} holds.

We are just left to show that there exists \(S\subseteq \text{supp}(z^{\oplus})\) such that \(\frac{e(S,V-S)}{\deg(S)}\leq \sqrt{2R(z^{\oplus})}\), the proof for \(z^{\ominus}\) being analogous.

Let us rescale \(z^{\oplus}\), let \(z'=\mu z^{\oplus}\) such that \(-1\leq z'_{i}\leq 1\). Let \(t\in (0,1]\) be chosen uniformly at random and let \(S_{t}\mathrel{\mathop:}=\{i:(z'_{i})^{2}\geq t\}\).

Now we analyze the expected values of \(e(S_{t},V-S_{t})\) and \(\deg(S_{t})\). Note that \(S_{t}\subseteq \text{supp}(z^{\oplus})\) by construction and that \(\min\{\deg(S_{t}),\deg(V-S_{t})\}=\deg(S_{t})\) since \(\deg(S_{t})\leq m\) by choice of \(c\). We have that

\begin{equation} \begin{split} \mathbb{E}[e(S_{t},V-S_{t})]&=\sum_{ij\in E}\text{Pr}[ij\in e(S_{t},V-S_{t})]\\ &= \sum_{ij\in E}|(z'_{i})^{2}- (z'_{j})^{2}|\\ &= \sum_{ij\in E}|z'_{i}- z'_{j}||z'_{i}+z'_{j}|\\ &\leq \sqrt{\sum_{ij\in E}(z'_{i}- z'_{j})^{2}}\sqrt{\sum_{ij\in E}(z'_{i}+ z'_{j})^{2}}\qquad\text{by the Cauchy-Schwarz inequality}\\ &\leq \sqrt{\sum_{ij\in E}(z'_{i}- z'_{j})^{2}}\sqrt{2\sum_{i\in V}d_{i}(z'_{i})^{2}}\\ &= \sqrt{R(z')}\sqrt{2}\sum_{i\in V}d_{i}(z'_{i})^{2}\qquad\text{since }R(z')=\frac{\sum_{ij\in E}(z'_{i}-z'_{j})^{2}}{\sum_{i\in V}d_{i}(z'_{i})^{2}}, \end{split}\nonumber \end{equation}and

\begin{equation} \begin{split} \mathbb{E}[\deg(S)]&=\sum_{i\in V}d_{i}\text{Pr}[i\in S_{t}]\\ &=\sum_{i\in V}d_{i}(z'_{i})^{2}. \end{split}\nonumber \end{equation}This implies \[ \frac{\mathbb{E}[e(S_{t},V-S_{t})]}{\mathbb{E}[\deg(S_{t})]}\leq \sqrt{2 R(z')},\nonumber \] which implies that there exists \(t\in (0,1]\) so that \[ \frac{e(S_{t},V-S_{t})}{\deg(S_{t})}\leq \sqrt{2R(z')}.\nonumber \]

4.3 References

This part of the notes closely follows the expositions by Lau (week 2,) and Spielman (2009-lecture 5, 2009-lecture 7).

5 Random walks

We consider the following random walk in a graph \(G=(V,E)\). Suppose we start at a vertex \(u_{0}\in V\) at time \(t=0\). At time \(t=i+1\) we stay at \(u_{i}\) with probability \(1/2\) or we move to a neighbour of \(u_{i}\) with probability \(1/(2d_{u_{i}})\). We call this random walk the "lazy random walk".

In this section we will answer the following two questions related to the lazy random walk.

- Stationary distribution What is the limiting distribution \(\pi\) of this process?

- Mixing time How many steps does it take to reach the limiting distribution?

5.1 An example

Let us begin by considering a simpler type of random walk. Suppose we start at vertex \(u_{0}\in V\) at time \(t=0\). We move from the current vertex \(u_{i}\) to one of its neighbours with probability \(1/d_{u_{i}}\). We denote by \(p^{t}\in\R^{V}\) the probability distribution at time \(t\), that is, \(p^{t}=(p^{t}_{1},\ldots,p^{t}_{n})\) where \(p^{t}_{i}\) denotes the probability of being at vertex \(i\) at time \(t\). For our example we are are assuming that \(p^{0}=e_{u_{0}}\) for some \(u_{0}\in V\) but in general \(p^{0}\) could be any probability distribution on \(V\), that is, \(p^{0}\in\mathbb{R}^{V}\) with \(\sum_{i\in V}p^{0}_{i}=1\).

Note that at step \(t\) the probability of being at vertex \(u\) is given by

\begin{equation} \label{eq:rw_vertex_recurrence} p_{u}^{t}=\sum_{i\in N(u)}p_{i}^{t-1}\frac{1}{d_{i}}. \end{equation}Using \eqref{eq:rw_vertex_recurrence} we can write \(p^{t}\) in terms of the adjacency \left (

\begin{array}{ccc} v1 & v2 \ \end{array}\right ) of \(G\) as follows.

\begin{equation} \nonumber p^{t}=(AD^{-1})p^{t-1}=\ldots=(AD^{-1})^{t}p^{0} \end{equation}We call a distribution \(\pi\) stationary if \(\pi=(AD^{-1})\pi\).

A natural stationary distribution is the one where the probability of being at each vertex is proportional to its degree. In symbols

\begin{equation} \nonumber \pi_{u}=\frac{d_{u}}{\sum_{v\in V}d_{v}}=\frac{d_{u}}{2m}, \end{equation}or using vector notation \(\pi\mathrel{\mathop:}=\frac{1}{2m}d\). This is indeed a stationary distribution, since

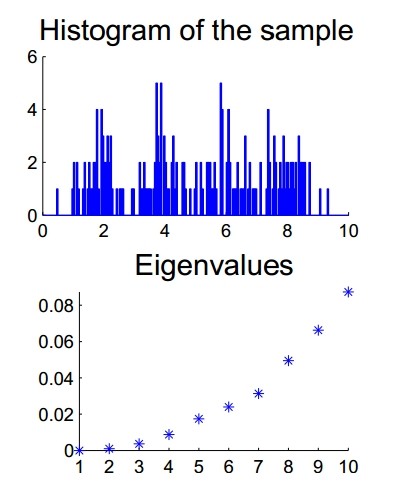

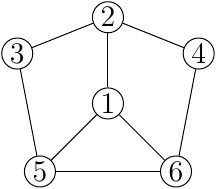

\begin{equation} \nonumber (AD^{-1})\pi=(AD^{-1})(\frac{1}{2m}d)=\frac{1}{2m}A\mathbb{1}=\frac{1}{2m}d=\pi. \end{equation}Let \(G=P_{n}\), the path on \(n\) vertices with \(n\) odd, and let \(m=\frac{n+1}{2}\) be the middle vertex. Let us observe how \(p^{t}\) evolves provided we start at \(m\), that is \(p^{0}=e_{m}\). Note that since \(G\) is bipartite, then the probability of being at an even numbered vertex at an even time is \(0\). Similarly, the probability of being at an odd numbered vertex at an odd time is \(0\). In the animation below this can be observed by looking at how the green colored vertices alternate at each step during the random walk.

Figure 1: A simulation of a random walk in \(P_{7}\).

We can extend the analysis from the example above to any bipartite graph. If we start at a single vertex, then a bipartite graph is an obstruction to reach a stationary distribution, since the probabilities in the partite sets will eventually alternate between being \(0\) and positive.

Another obstruction to reach the stationary distribution \(\pi\) is to start at a single vertex in a disconnected graph, since no vertex at other components can be reached.

In fact, it turns out to be the case that if the graph is connected and non-bipartite then we eventually reach the stationary distribution \(\pi\).

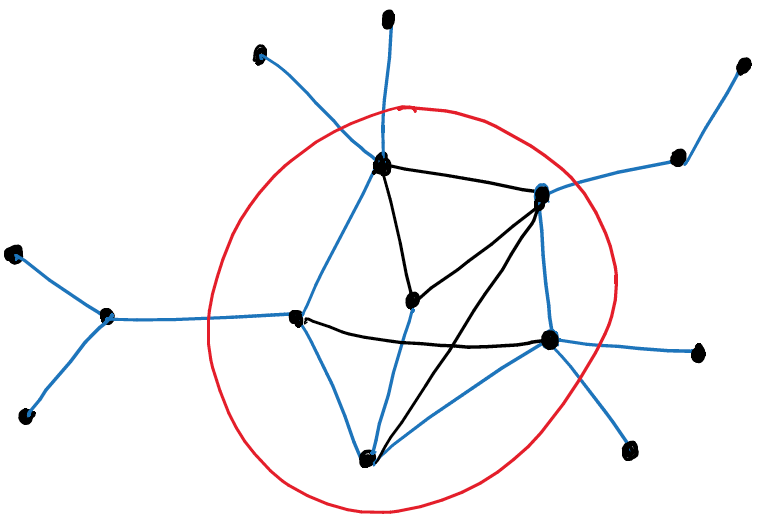

Here we see a random walk in a connected non-bipartite graph. Observe how after 10 steps the changes in the probability distribution become less noticeable.

Figure 2: A simulation of a random walk in the Petersen graph.

5.2 Lazy random walk analysis

We now go back to our original example, namely the lazy random walk. Note that in this case the probability of being at a vertex \(u\) at time \(t\), \(p_{u}^{t}\) is given by

\begin{equation} \nonumber p^{t}_{u}=\frac{1}{2}p_{u}^{t-1}+\frac{1}{2}\sum_{i\in N(u)} \frac{p_{i}^{t-1}}{d_{i}}. \end{equation}We can rewrite this as

\begin{equation} \label{eq:rw_lazy} \begin{split} p^{t}&=\frac{1}{2}p^{t-1}+\frac{1}{2}AD^{-1}p^{t-1}\\ &=\frac{1}{2}(I+AD^{-1})p^{t-1}.\\ \end{split} \end{equation}We call the matrix \(W\mathrel{\mathop:}\frac{1}{2}(I+AD^{-1})p^{t-1}\) the (lazy random) walk matrix. Equation \eqref{eq:rw_lazy} can now be rewritten as \(p^{t}=Wp^{t-1}=\ldots=W^{t}p^{0}\).

Observe that \(W\) is not symmetric, but it is similar to a symmetric matrix, since

\begin{equation} \nonumber \begin{split} D^{-1/2}WD^{1/2}&=D^{-1/2}(\frac{1}{2}I+\frac{1}{2}AD^{-1})D^{1/2}\\ &=\frac{1}{2}I+\frac{1}{2}D^{-1/2}AD^{-1/2}\\ &=\frac{1}{2}I+\frac{1}{2}\hat{A},\\ \end{split} \end{equation}where \(\hat{A}\) is the normalized adjacency matrix. Thus \(W=D^{1/2}(\frac{1}{2}I+\frac{1}{2}\hat{A})D^{-1/2}\).

Denote the eigenvalues of \(\hat{A}\) by \(1=\alpha_{1}\geq\ldots\geq\alpha_{n}\geq -1\) with associated orthonormal eigenvectors \(v_{1},\ldots,v_{n}\).

Then \(W\) has eigenvalues \(\frac{1}{2}(1+\alpha_{i})\) with associated eigenvectors \(D^{1/2}v_{i}\), \(i=1,\ldots,n\). Indeed, we have

\begin{equation} \nonumber \begin{split} W(D^{1/2}v_{i})&=D^{1/2}(\frac{1}{2}I+\frac{1}{2}\hat{A})v_{i}\\ &= D^{1/2}(\frac{1}{2}(1+\alpha_{i}))v_{i}\\ &= \frac{1}{2}(1+\alpha_{i}) (D^{1/2}v_{i}). \end{split} \end{equation}Since \(D^{1/2}\) has rank \(n\) it follows that \(D^{1/2}v_{1},\ldots,D^{1/2}v_{n}\) is a basis of eigenvectors corresponding to eigenvalues \(\frac{1}{2}(1+\alpha_{1}),\ldots,\frac{1}{2}(1+\alpha_{n})\).

Let \(\lambda_{i}=\frac{1}{2}(1+\alpha_{i})\), \(i=1,\ldots,n\). Then the eigenvalues of \(W\) are \(1=\lambda_{1}\geq\lambda_{2}\geq\ldots\geq\lambda_{n}\geq0\).

Observe that \(W\pi=D^{1/2}(\frac{1}{2}I+\frac{1}{2}\hat{A})D^{-1/2}\pi=\frac{1}{2}(\pi+AD^{-1}\pi)=\pi\), so \(\pi\) is an eigenvector of \(W\) associated to eigenvalue \(\lambda_{1}=1\).

At this point we make a mild assumption about \(G\) to prove our main result. We assume that \(G\) is connected and non-bipartite. This implies \(1=\lambda_{1}>\lambda_{2}\), and since \(W\pi=\pi\) we also conclude that any eigenvector associated to \(\lambda_{1}\) must be a scalar multiple of \(\pi\).

Note: Let \(M\) be a matrix with eigenvalues \(1=\lambda_{1}>\lambda_{2}\geq\ldots\geq\lambda_{n}\geq0\) with associated orthonormal eigenvectors \(v_{1},\ldots,v_{n}\). Let \(x\in\mathbb{R}^{n}\) and write \(x=\sum_{i=1}^{n}c_{i}v_{i}\). Then

\begin{equation} \nonumber M^{t}x=M^{t}(\sum_{i=1}^{n}c_{i}v_{i})=\sum_{i=1}^{n}\lambda^{t}_{i}c_{i}v_{i}=c_{1}v_{1}+\sum_{i=2}^{n}\lambda^{t}_{i}c_{i}v_{i}. \end{equation}So \(M^{t}x\xrightarrow{t\to \infty} c_{1}v_{1}\), since \(\lambda_{i}<1\) for \(i=2,\ldots,n\). We will use this idea to show that \(W\) admits a stationary distribution and to provide a bound on the convergence rate. It is important to note that \(W\) may not admit an orthonormal basis of eigenvectors, so a more careful analysis will be required.

Note that if the graph \(G\) is regular, then the walk matrix \(W\) is in fact symmetric and therefore admits an orthonormal basis.

Let \(G=(V,E)\) be a connected non-bipartite graph. Then the lazy random walk in \(G\) admits the unique stationary distribution \(\pi\) and the probability distribution on \(V\) converges to \(\pi\) in \(\Omega(\log n)\) steps.

- Show that the lazy random walk converges to \(\pi\).

- Write \(D^{1/2}p_{0}\) as a linear combination of the orthonormal eigenvectors of \(\hat{A}\), \(v_1,\ldots,v_n\). Deduce that \(W^{t}p_{0}\xrightarrow{t\to\infty}D^{1/2}c_{1}v_{1}\), where \(c_{1}=\langle D^{-1/2}p_{0},v_{1}\rangle\).

- Show that \(D^{1/2}c_{1}v_{1}=\pi\).

- Show that rate of convergence is \(O(\log n)\) by showing that \(\Vert p^{t}-\pi \Vert \leq e^{-t\varepsilon}\sqrt{n}\).

Suppose that we start with the probability distribution \(p_{0}\) at \(t=0\). First we show that the lazy random walk converges to \(\pi\). Recall that \(W=D^{1/2}(\frac{1}{2}I+\frac{1}{2}\hat{A})D^{-1/2}\), therefore \(W^{t}=D^{1/2}(\frac{1}{2}I+\frac{1}{2}\hat{A})^{t}D^{-1/2}\).

Write \(D^{-1/2}p_{0}=\sum_{i\in V}c_{i}v_{i}\), where \(c_{i}=\langle D^{-1/2}p_{0},v_{i}\rangle\) is the scalar projection of \(D^{-1/2}p_{0}\) onto \(v_{i}\), \(i\in V\). We have

\begin{equation} \nonumber \begin{split} W^{t}p_{0}&=D^{1/2}(\frac{1}{2}I+\frac{1}{2}\hat{A})^{t} (\sum_{i\in V}c_{i}v_{i})\\ &= D^{1/2}\sum_{i\in V}\left(\frac{1}{2}(1+\alpha_{i})\right)^{t}c_{i}v_{i}\\ &= D^{1/2}\sum_{i\in V}c_{i}\lambda_{i}^{t}v_{i}\\ &= D^{1/2}c_{1}v_{1}+D^{1/2}\sum_{i=2}^{n}\lambda_{i}^{t}c_{i}v_{i}. \end{split} \end{equation}Since \(G\) is connected we have \(\lambda_{2}<1\), so \(W^{t}p_{0}\xrightarrow{t\to\infty}D^{1/2}c_{1}v_{1}\).

We are just left to show that \(\pi=D^{1/2}c_{1}v_{1}\). Recall that \(v_{1}=\frac{d^{1/2}}{\Vert d^{1/2}\Vert}=\frac{d^{1/2}}{\sqrt{2m}}\), therefore \(c_{1}=\langle D^{-1/2}p_{0},\frac{d^{1/2}}{\Vert d^{1/2}\Vert}\rangle=\frac{1}{\sqrt{2m}}p_{0}^{\intercal}D^{-1/2}d^{1/2}=\frac{1}{\sqrt{2m}}p_{0}^{\intercal}\mathbb{1}=\frac{1}{\sqrt{2m}}\). So \(D^{1/2}c_{1}v_{1}=\frac{1}{2m}D^{1/2}d^{1/2}= \frac{1}{2m}d= \pi\).

We now show that the convergence rate is \(O(\log n)\). Suppose \(\lambda_{2}=1-\varepsilon\), or equivalently, \(\alpha_{2}=1-2\varepsilon\). We have \(p^{t}=W^{t}p_{0}=\pi + D^{1/2}\sum_{i=2}^{n}\lambda_{i}^{t}c_{i}v_{i}\).

Therefore

\begin{equation} \nonumber \begin{split} \Vert p^{t}-\pi\Vert &= \Vert D^{1/2}\sum_{i=2}^{n}\lambda_{i}^{t}c_{i}v_{i} \Vert\\ &\leq \Vert (1-\varepsilon)^{t}D^{1/2}\sum_{i=2}^{n}c_{i}v_{i}\Vert\\ &\leq (1-\varepsilon)^{t} \Vert D^{1/2}\Vert\, \Vert \sum_{i\in V}c_{i}v_{i}\Vert \\ &\leq (1-\varepsilon)^{t} (\max_{i\in V}\sqrt{d_{i}})\, \Vert D^{-1/2}p_{0}\Vert\\ &\leq (1-\varepsilon)^{t} \frac{\max_{i\in V}\sqrt{d_{i}}}{\min_{i\in V}\sqrt{d_{i}}}\\ &\leq e^{-t\varepsilon} \max_{i,j\in V}\sqrt{\frac{d_{i}}{d_{j}}}\\ &\leq e^{-t\varepsilon}\sqrt{n}. \end{split} \end{equation}Taking \(t=\Omega(\frac{1}{\varepsilon}(\frac{1}{2}\log n-\log \delta))\) we obtain that \(\Vert p^{t}-\pi\Vert\leq \delta\).

If we wish to obtain a bound for \(\Vert p^{t}-\pi\Vert\) that does not depend on \(n\) or \(\varepsilon\) we may take \(t=\frac{1}{\varepsilon}(\log(n)/2-\log\delta)\) to get an upper bound of \(\delta\).

5.3 Analysis of lazy random walk using conductance

In this section we analyze the convergence of the lazy random walk using conductance via the Lovász-Simonovits Theorem.

Recall that the stationary distribution \(\pi\) assigns to each vertex \(u\) a probability that is proportional to the number of incidences at \(u\), namely \(\frac{d_{u}}{2m}\). Another way of thinking about this is the following. The probability of using edge \(uv\) from time \(i\) to time \(i+1\) is \(\frac{d_{u}}{2m}\frac{1}{d_{u}}+\frac{d_{v}}{2m}\frac{1}{d_{v}}=\frac{1}{m}\). So this probability is distributed uniformly on \(E\).

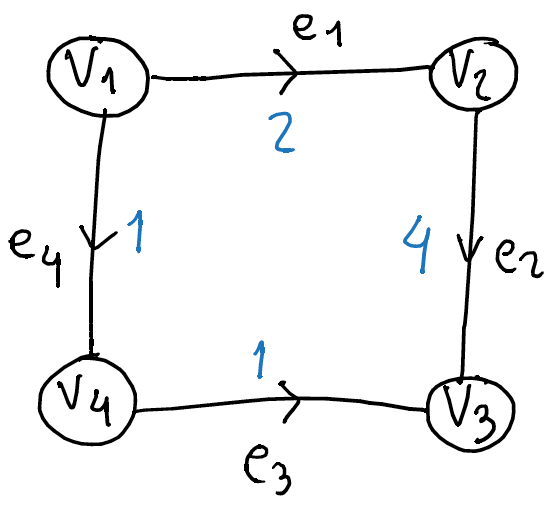

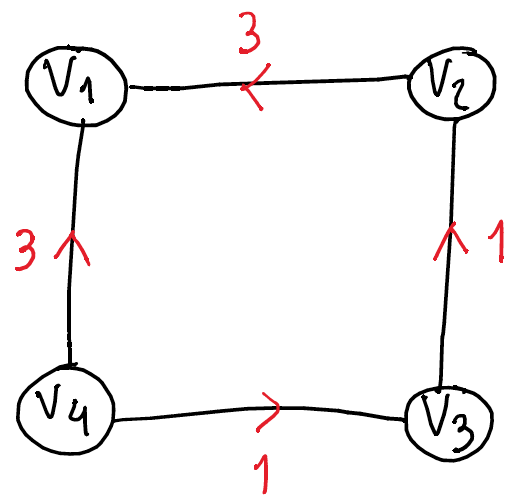

We perform the convergence analysis not on \(G\) but on a digraph \(D\) obtained from \(G\). The digraph \(D\) has the same vertex set as \(G\) and it has arcs \((u,v)\) and \((v,u)\) if and only if \(uv\in E\). We also add \(d_{u}\) loops at vertex \(u\), for each \(u\in V\). In total \(D\) has \(4m\) arcs.

Denote by \(p^{t}\in \mathbb{R}^{n}\) the probability distribution at time \(t\) during the lazy random walk. Let \(q^{t}\) be the probability distribution induced by \(p^{t}\) on the set of arcs, that is \(q^{t}(u,v)=\frac{p^{t}_{u}}{2d_{u}}\).

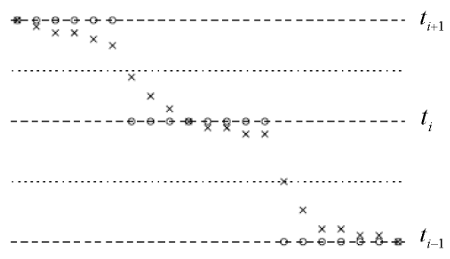

Define \(C^{t}\) to be the cumulative distribution function of \(q^{t}\), and let \(C^{t}(k)\) be the sum of the \(k\) largest values of \(q^{t}\).

For a fixed \(t\), suppose \(V\) is labeled so that \(\frac{p_{1}^{t}}{2d_{1}}\geq \frac{p_{2}^{t}}{2d_{2}}\geq \ldots\geq \frac{p^{t}_{n}}{2d_{n}}\). Given this labeling we have that \(C^{t}(2d_{1})=p_{1}^{t}\), \(C^{t}(2d_{1}+2d_{2})=p_{1}^{t}+p_{2}^{t}\), \(\ldots\), \(C^{t}(2\sum_{i\in [k]}d_{i})=\sum_{i\in[k]}p_{i}^{t}\). We say \(x_{k}\) is a breakpoint if \(x_{k}=\sum_{i\in[k]}2d_{i}\). Note that \(C^{t}\) behaves linearly between \(x_{i}\) and \(x_{i+1}\), so \(C^{t}\) is a piecewise linear function. Since \(C^{t}\) depends on \(t\) (via \(p^{t}\)), we relabel \(V\) for each time so that \(C^{t}\) preserves the properties from above.

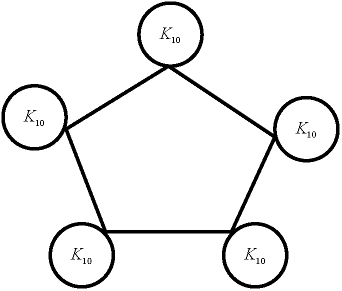

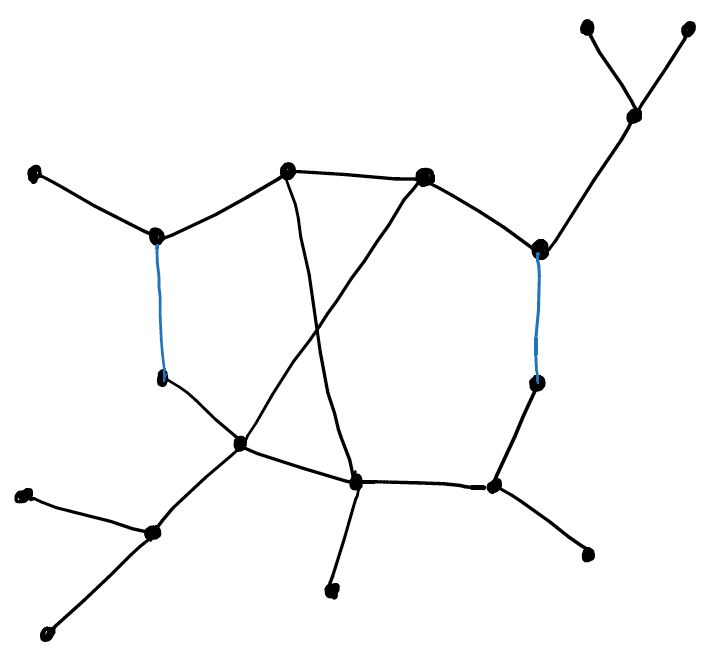

We now present an animation of a random walk on a connected non-bipartite graph on 10 vertices. Observe that after 9 steps the changes in the probability distribution are relatively insignificant. Here it can be observed that as time goes by, the probability at each vertex is proportional to its degree.

Figure 3: A simulation of a random walk.

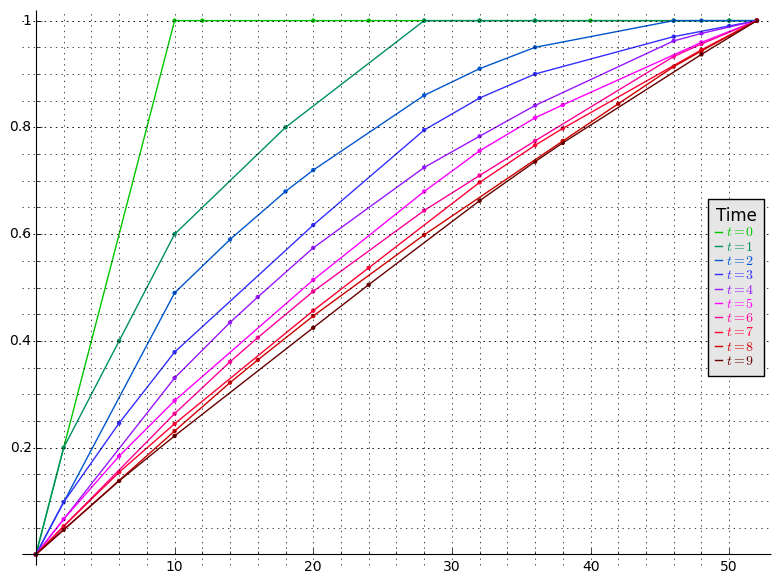

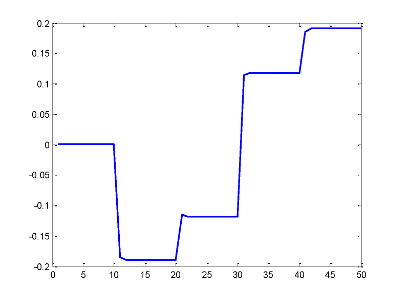

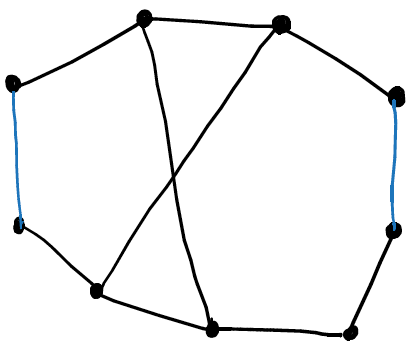

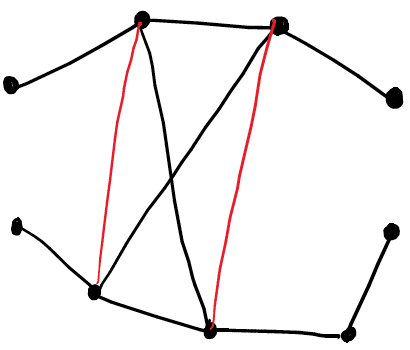

In the plot below, we show how the curve \(C^{t}\) corresponding to the random walk above evolves as time goes by. We can observe that \(C^{t}\) is a piecewise linear concave curve and that as time goes by it approaches a straight line. The main result in this section provides a bound on how fast \(C^{t}\) converges to that straight line.

Figure 4: The function \(C^{t}\) for several values of \(t\).

We begin by showing a property of \(C^{t}\) which is closely related to concavity.

Let \(C^{t}(x)\) be the function defined above for \(x\in[4m]\) and extend it to the interval \([0,4m]\) by making it piecewise linear. Then for any \(x\) with \([x-s,x+s]\subseteq [0,4m]\) and for any \(0\leq r< s\) we have that

\begin{equation} \label{eq:rw2_Ct_property} \frac{1}{2}(C^{t}(x+s)+C^{t}(x-s))\leq\frac{1}{2}(C^{t}(x+r)+C^{t}(x-r)). \end{equation}Note that equation \eqref{eq:rw2_Ct_property} is equivalent to

\begin{equation} \nonumber C^{t}(x+s)+C^{t}(x-s)\leq C^{t}(x+r)+C^{t}(x-r). \end{equation}By the way the vertices are labeled we obtain that larger probabilities come earlier along \([0,4m]\). This implies that variation of values of \(C^{t}\) on an interval of fixed length are greater the closer the interval is to \(0\) and smaller the closer the interval is to \(4m\). Therefore, if \([x-r,x+r]\subseteq [x-s,x+s]\subseteq [0,4m]\) then

\begin{equation} \nonumber C^{t}(x+s)-C^{t}(x+r)\leq C^{t}(x-r)-C^{t}(x-s). \end{equation}Thus \(C^{t}(x+s)+C^{t}(x-s)\leq C^{t}(x+r)+C^{t}(x-r)\), as desired.

Note that taking \(r=0\) in the previous lemma implies that \(C^{t}\) is concave, that is,

\begin{equation} \nonumber C^{t}(x)\geq \frac{C^{t}(x-s)+C^{t}(x+s)}{2}, \end{equation}for all \([x-s,x+s]\subseteq[0,4m]\).

Observe that the closer \(p^{t}\) is to the stationary distribution \(\pi\), the closer the graph of \(C^{t}(x)\) is to being a line, since the probabilities are closer to being distributed equally among arcs of \(D\). Next we show the Lovász-Simonovits theorem which we will use to show that the rate of convergence of the lazy random walk is \(O(\sqrt{m})\), provided that the conductance \(\Phi(G)\) is constant.

Let \(G\) be a connected graph with conductance at least \(\phi\). Then for any probability distribution on \(V\), \(p_{0}\in \mathbb{R}^{n}\), \(t\geq 0\) and \(x\in (0,4m)\) we have that

\begin{equation} \nonumber C^{t}(x)\leq \frac{1}{2}\left( C^{t-1}(x- \phi x)+C^{t-1}(x+ \phi x)\right), \end{equation}if \(x\in (0,2m]\), and

\begin{equation} \nonumber C^{t}(x)\leq \frac{1}{2}\left( C^{t-1}(x- \phi(4m - x))+C^{t-1}(x+ \phi(4m - x))\right), \end{equation}if \(x\in [2m,4m)\).

- Split into two cases, depending on whether \(x\in (0,2m]\) or \(x\in [2m,4m)\). Further restrict \(x\) to be a breakpoint, the proof for arbitrary \(x\) follows from concavity of \(C^{t-1}\).

- Since \(x\) is a breakpoint then \(C^{t}(x)=p^{t}([k])\). Then show that \begin{equation} \nonumber p^t([k])=q_{t-1}(\text{arcs with head and tail in}[k])+q_{t-1}(\text{arcs with head or tail in}[k]) \end{equation}

- Use the property of \(C^{t}\) that for any loopless set of arcs \(A\) we have \(q_{t-1}(A)\leq \frac{1}{2}C^{t-1}(2|A|)\) together with the fact that \(e([k],V-[k])\geq \phi \deg([k])\) to deduce that \begin{equation} \nonumber C^{t}(x)\leq \frac{1}{2}\left( C^{t-1}(x- \phi x)+C^{t-1}(x+ \phi x)\right). \end{equation}

We prove the result for \(x\in (0,2m]\), the result for \(x\in[2m,4m)\) follows by an analogous argument.

First we consider the case where \(x\) is a breakpoint, say \(x=x_{k}=\sum_{i\in[k]}2d_{i}\) and let \(S=[k]\subseteq V\). Therefore \(C^{t}(x)=p^{t}(S)\).

Let \(S^{l}\) be the set of loops within \(S\), that is, \(S^{l}=\{(u,u):u\in S\}\). Denote by \(S^{o}\) the set of arcs whose tail is in \(S\), that is \(S^{o}=\{(u,v):u\in S\}\). Similarly let \(S^{i}\) denote the set of arcs whose head is in \(S\), namely \(S^{i}=\{(u,v):v\in S\}\). Observe that \(S^{o}\cap S^{i}\) is the set of arcs whose head and tail are in \(S\).

We can arrive to \(S\) from time \(t-1\) either by remaining within \(S\) via an arc of \(S^{l}\) , or by incoming to \(S\) through an arc in \(S^{i}\). Therefore

\begin{equation} \nonumber p^{t}(S)=q_{t-1}(S^{l})+q_{t-1}(S^{i}). \end{equation}Since at a given vertex there is a one to one correspondence between outgoing arcs and loops, we have that \(q_{t-1}(S^{l})=q_{t-1}(S^{o})\). Therefore

\begin{equation} \label{eq:qCrelation} \begin{split} p^{t}(S) &= q_{t-1}(S^{o})+q_{t-1}(S^{i})\\ &=q_{t-1}(S^{o}\cap S^{i})+q_{t-1}(S^{o}\cup S^{i}). \end{split} \end{equation}Now, observe that the set of arcs \(S^{o}\cap S^{i}\) contains no loops. Therefore we have

\begin{equation} \nonumber q_{t-1}(S^{o}\cap S_{i})\leq \frac{1}{2}C^{t-1}(2|S^{o}\cap S^{i}|), \end{equation}since for each arc accounted for in \(q_{t-1}(S^{o}\cap S^{i})\) there is an arc and a loop with at least its probability being accounted for in \(C^{t-1}(2|S^{o}\cap S^{i}|)\). Similarly,

\begin{equation} \nonumber q_{t-1}(S^{o}\cup S_{i})\leq \frac{1}{2}C^{t-1}(2|S^{o}\cup S^{i}|). \end{equation}Therefore from \eqref{eq:qCrelation} we derive the following inequality

\begin{equation} \nonumber p^{t}(S) \leq \frac{1}{2}(C^{t-1}(2|S^{o}\cap S^{i}|)+C^{t-1}(2|S^{o}\cup S^{i}|)). \end{equation}By noting that \(|S^{o}\cap S^{i}|=\deg(S)-e(S,V-S)\) and \(|S^{o}\cup S^{i}|=\deg(S)+e(S,V-S)\) we arrive to

\begin{equation} \nonumber p^{t}(S) \leq \frac{1}{2}\left(C^{t-1}(2(\deg(S)-e(S,V-S)))+C^{t-1}(2(\deg(S)+e(V,V-S)))\right). \end{equation}Since we assumed \(x\leq 2m\) we have that \(e(S,V-S)\geq \phi \deg(S)\) and, by the previous lemma, we conclude that

\begin{equation} \nonumber p^{t}(S)\leq \frac{1}{2}\left(C^{t-1}(2(\deg(S)-\phi\deg(S)))+C^{t-1}(2(\deg(S)+\phi\deg(S)))\right). \end{equation}Finally, since \(x_{k}=2\deg(S)\) we have that

\begin{equation} \nonumber p^{t}(S)\leq \frac{1}{2}\left(C^{t-1}(x-\phi x)+C^{t-1}(x+\phi x )\right), \end{equation}as desired. The result for \(x\in (0,2m]\) now follows from concavity of \(C^{t-1}\).

We now use the previous theorem to derive the convergence rate of the lazy random walk in terms of the conductance.

Let \(G\) be a connected graph. Then \(p^{t}(S)-\pi(S)\leq \sqrt{\deg(S)}(1-\phi^{2}/8)^{t}\)

We proceed by showing by induction on \(t\) that

\begin{equation} C^{t}(x)\leq \frac{x}{4m} + \min\{\sqrt{x}, \sqrt{4m-x}\} (1-\phi^{2}/8)^{t}, \quad\text{for all }x\in[0,4m].\label{eq:LSbound} \end{equation}Let us denote by \(U^{t}(x)\) the right hand side of the previous inequality. We prove the aove inequality for \(x\in[0,2m]\), since the argument for \(x\in[2m,4m]\) follows from an analogous argument.

First let us consider the case where \(t=0\). Observe that for \(x\in [1,2m]\) we have that \(C^{0}(x) \leq 1 \leq U^{0}(x)\). Finally for \(x\in [0,1]\) we have that \(C^{0}(x)\leq \sqrt{x}\leq U^{0}(x)\).

Now, let us assume that \eqref{eq:LSbound} holds for all \(t\leq k\). Let us show that \eqref{eq:LSbound} holds for \(t=k+1\). Let us begin by showing that

\begin{equation} \nonumber \frac{1}{2}(U^{t-1}(x-\phi x )+U^{t-1}(x+\phi x))\leq U^{t}(x). \end{equation}We have that

\begin{equation} \label{eq:LSboundU} \begin{split} \frac{1}{2}(U^{t-1}(x-\phi x )+U^{t-1}(x+\phi x)) &= \frac{x}{4m}+\frac{1}{2}\left( \sqrt{x-\phi x} + \sqrt{x + \phi x}\right)(1-\phi^{2}/8)^{t}\\ &= \frac{x}{4m}+\frac{\sqrt{x}}{2}\left( \sqrt{1-\phi} + \sqrt{1 + \phi} \right)(1-\phi^{2}/8)^{t}. \end{split} \end{equation}Considering the Taylor series of \(\sqrt{1+\phi}\) at \(\phi=0\) we have

\begin{equation} \nonumber \begin{split} \sqrt{1+\phi} &= 1+ \frac{1}{2}\phi - \frac{1}{8}\phi^{2} + \frac{1}{16} \phi^{3} - \frac{5}{128}\phi^{4}\\ &\leq 1+ \frac{1}{2}\phi - \frac{1}{8}\phi^{2}. \end{split} \end{equation}Similarly, for \(\sqrt{1-\phi}\) we obtain

\begin{equation} \nonumber \begin{split} \sqrt{1-\phi} &= 1- \frac{1}{2}\phi - \frac{1}{8}\phi^{2} - \frac{1}{16} \phi^{3} - \frac{5}{128}\phi^{4}\\ &\leq 1- \frac{1}{2}\phi - \frac{1}{8}\phi^{2}. \end{split} \end{equation}Considering the previous two inequalities we obtain the following upper bound for \eqref{eq:LSboundU}.

\begin{equation} \nonumber \begin{split} \frac{1}{2}(U^{t-1}(x-\phi x )+U^{t-1}(x+\phi x)) &\leq \frac{x}{4m} +\sqrt{x} ( 1 - \phi^{2}/8 )(1-\phi^{2}/8)^{t}.\\ &= U^{t}(x). \end{split} \end{equation}Thus, the above inequality together with the previous theorem imply that \(C^{t}(x)\leq U^{t}(x)\), as desired.

To derive the result we note that if \(S\subseteq V\) we have that

\begin{equation} \nonumber \begin{split} p^{t}(S)-\pi(S) &\leq C^{t}\left( |S| \right) - \frac{\sum_{i\in S}d_{i}}{2m} \\ &\leq \frac{\sum_{i\in S}d_{i}}{2m} + \sqrt{\sum_{i\in S}d_{i}}(1-\phi^{2}/8)^{t} - \frac{\sum_{i\in S}d_{i}}{2m}\\ &= \sqrt{\deg(S)}(1-\phi^{2}/8)^{t} \end{split} \end{equation}5.4 The Lovasz Simonovits theorem

The Lovasz Simonovits theorem is a result that is interesting by itself. In this section we provide an alternate proof of it.

We use the following notation in this section.

- \(G=(V,E)\) denotes a connected graph.

- \(W\) is the walk matrix of the lazy random walk, explicitly,

\begin{equation}

\nonumber

W=\frac{1}{2}(I+AD^{-1}).

\end{equation}

Note that \(W_{i,i}=1/2\) and that the column sum is \(1\).

It should be noted that the Lovasz Simonovits theorem is more

- \(p^{0}\in \mathbb{R}^{V}\) is a probability distribution on \(V\). The

vector \(p^{t}\) denotes the probability distribution after \(t\) steps

of the lazy random walk, recall that

\begin{equation}

\nonumber

p^{t}=Wp^{t-1}

\end{equation}

for \(t\geq 1\).

- We denote the stationary probability distribution by

\(\pi=(\pi_{1},\ldots,\pi_{n})\). Recall that this probability

distribution satisfies \(W\pi=\pi\).

- Observe that since \(W\pi=\pi\), we have that \(\sum_{j\in V}w_{i,j}\pi_{j}=\pi_{i}\). Also, since the sum of the entries of a column of \(W\) is \(1\), then \(\sum_{j\in V} w_{j,i} \pi_{i} = \pi_{i}\). Therefore \(\sum_{j\in V}w_{i,j}\pi_{j} = \sum_{j\in V} w_{j,i} \pi_{i}\).

- \(\gamma\in \mathbb{R}^{n}\) denotes the sum of the first \(k\) rows of \(W\), namely, \(\gamma = (\underbrace{1,\ldots,1}_{k\text{ ones}},0,\ldots,0)W\). Note that \(\gamma_{i}\geq 1/2\) for \(i\leq k\), and \(\gamma_{i}\leq 1/2\) for \(i\geq k\) by the structure of \(W\).

- \(F=W\Pi\), where \(\Pi=\text{diag}(\pi_{1},\ldots,\pi_{n})\). Note that

\(F\) is symmetric, since for \(ij\in E\) we have

\begin{equation}

\nonumber

f_{i,j}=W_{i,j}\pi_{j}=\frac{1}{2d_{j}}\frac{d_{j}}{2m}=\frac{1}{4m}

\end{equation}

and

\begin{equation} \nonumber f_{j,i}=W_{j,i}\pi_{i}=\frac{1}{2d_{i}}\frac{d_{i}}{2m}=\frac{1}{4m}. \end{equation}If \(ij\not\in E\) then we have \(f_{i,j}=0=f_{j,i}\).

In fact \(F\) is symmetric since \(F=W\Pi=\frac{1}{2}(I+AD^{-1})\Pi=\frac{1}{2}(\Pi + A D^{-1}\Pi) = \frac{1}{2}(\Pi + \frac{1}{2m}A)\).

By the symmetry of \(F\) it follows that for any \(S\subseteq V\) we have

\begin{equation} \nonumber \sum_{j\in V-S}(\sum_{j\in S}f_{i,j})=\sum_{i\in S}(\sum_{h\in V-S}f_{h,i}). \end{equation} - Here we define the function \(d^{t}:[0,1]\rightarrow \mathbb{R}\) which

is part of the statement of the Lovasz Simonovits theorem. We proceed

by defining two functions, \(d_{1}^{t}\) and \(d_{2}^{t}\), and showing

that they are equal, we then call this function \(d^{t}\).

Let us begin by defining \(d_{1}^{t}:[0,1]\rightarrow \mathbb{R}\). This function is defined in terms of the knapsack linear program as follows.

\begin{equation} \nonumber \begin{array}{r@{}l@{}l} d_{1}^{t}(x)= &{} \text{max} \quad &{}\sum_{i\in V}(p_{i}^{t}-\pi_{i})w_{i} \\ &{}\text{s.t.}\quad &{}\sum_{i\in V}\pi_{i}w_{i} =x \\ &{} &{} 0\leq w_{i}\leq 1, \quad i\in V. \end{array} \end{equation}Now, let us make an assumption for the definition of \(d_{2}^{t}\). Let us assume that the elements of \(V\) are labelled so that

\begin{equation} \nonumber \frac{p_{1}^{t}}{\pi_{1}} \geq \frac{p_{2}^{t}}{\pi_{2}} \geq \dots \geq \frac{p_{n}^{t}}{\pi_{n}}, \end{equation}and, assuming the ordering above, let \(\pi[k] = \sum_{i\in[k]}\pi_{i}\).

We define \(d_{1}^{t}:[0,1]\rightarrow \mathbb{R}\) as follows.

\begin{equation} \nonumber d_{2}^{t}(x)=(p_{1}-\pi_{1})+\dots+(p_{k}-\pi_{k})+\frac{x-\pi[k]}{\pi_{k+1}}(p_{k+1}-\pi_{k+1}), \end{equation}where \(k\) is such that \(\pi[k]\leq x \leq \pi[k+1]\).

We claim that \(d_{1}^{t}=d_{2}^{t}\). This follows since the optimal solution to the knapsack linear program is given by ordering the items by decreasing \(\frac{\text{profit}}{\text{size}}\) ratios and then picking as many items as to fill the knapsack.

Note that we can think of \(d^{t}\) as the function that describes how the optimal value of the knapsack linear program changes as we increase the size of the knapsack.

- Finally, let us recall the notion of conductance of a set. We define

the conductance of \(S\) as

\begin{equation}

\nonumber

\phi_{S} = \frac{ \sum_{j\in S}( \sum_{i\in V-S} w_{i,j} ) \pi_{j} }{

\min\{ \pi(S), \pi(V-S) \}} = \frac{ \sum_{j\in S}( \sum_{i\in V-S}

f_{i,j} ) }{\min\{ \pi(S), \pi(V-S) \}}.

\end{equation}

The conductance of a graph \(G\) being defined as \(\Phi=\min_{S\subseteq V}\phi_{S}\).

We are now ready to state the Lovasz Simonovits theorem.

Let \(G=(V,E)\) be a connected graph and let \(p^{0}\) be a probability distribution on \(V\). If \(d^{t}\) is defined as above then we have the following. If \(x\in[0,1/2]\) then

\begin{equation} d^{t}(x)\leq \frac{1}{2}(d^{t-1}(x-2\Phi x)+d^{t-1}(x+2\Phi x)). \label{eq:LS1} \end{equation}If \(x\in[1/2,1]\) then

\begin{equation} \nonumber d^{t}(x)\leq \frac{1}{2}(d^{t-1}(x-2\Phi (1-x))+d^{t-1}(x+2\Phi (1-x))). \end{equation}We prove the result for the case where \(x\in [0,1/2]\), the other case follows from an analogous argument. First we show that \eqref{eq:LS1} holds for the case where \(x=\pi[k]\).

If \(x=\pi[k]\) then

\begin{equation} \label{eq:gamma} \begin{split} d^{t}(x)&=\sum_{i\in k}p_{i}-\pi_{i}\\ &= (\underbrace{1,\dots,1}_{k\text{ ones}},0,\dots,0)(p^{t}-\pi)\\ &= (\underbrace{1,\dots,1}_{k\text{ ones}},0,\dots,0)(Wp^{t-1}-W\pi)\\ &= (\underbrace{1,\dots,1}_{k\text{ ones}},0,\dots,0)W(p^{t-1}-\pi)\\ &= \gamma^{\intercal}(p^{t-1}-\pi), \end{split} \end{equation}where \(\gamma\) is the sum of the first \(k\) rows of \(W\).

Let us write \(\gamma=\frac{\gamma'+\gamma''}{2}\), where \(\gamma',\gamma''\in [0,1]^{n}\). We take

\begin{equation} \gamma'_{j}= \begin{cases} 2\gamma_{j}-1 &\text{if }j\leq k\\ 0 &\text{if }j>k \end{cases},\qquad\text{ and }\qquad \gamma''_{j}= \begin{cases} 1 &\text{if }j\leq k\\ 2\gamma_{j} &\text{if }j>k \end{cases}. \end{equation}Define \(x'=(\gamma')^{\intercal}\pi\) and \(x''=(\gamma'')^{\intercal}\pi\). We then have that

\begin{equation} \label{eq:LSstep1} \begin{split} d^{t}(x)&= \gamma^{\intercal}(p^{t-1}-\pi)\\ &= \left(\frac{\gamma'+\gamma''}{2}\right)^{\intercal}(p^{t-1}-\pi)\\ &= \frac{(\gamma')^{\intercal}(p^{t-1}-\pi)+(\gamma'')^{\intercal}(p^{t-1}-\pi)}{2}. \end{split} \end{equation}Observe that \((\gamma')^{\intercal}(p^{t-1}-\pi)=x'\) so \(\gamma'\) is a feasible solution to the knapsack linear program where the knapsack has capacity \(x'\), thus \((\gamma')^{\intercal} (p^{t-1}-\pi) \leq d^{t-1}(x')\). Similarly we have \((\gamma'')^{\intercal} (p^{t-1}-\pi) \leq d^{t-1}(x'')\). Thus, from \eqref{eq:LSstep1} we get

\begin{equation} \label{eq:LSstep2} d^{t}(x)\leq \frac{d^{t-1}(x') +d^{t-1}(x'')}{2}. \end{equation}We claim that $x-x'≥ 2Φ x.$ Let us write the left hand side explicitly.

\begin{equation} \begin{split} x-x'&=\sum_{j\in V} \gamma_{j} \pi_{j} - \sum_{j\in V} \gamma_{j}' \pi_{j}\\ &=\sum_{j\leq k} (1-\gamma_{j}) \pi_{j} + \sum_{j> k} \gamma_{j} \pi_{j}\\ &=\sum_{j\leq k} \left(\sum_{i>k}w_{i,j} \right) \pi_{j} + \sum_{j> k} \left(\sum_{i\leq k}w_{i,j} \right)\pi_{j}\\ &=\sum_{j\leq k} \left(\sum_{i>k}f_{i,j} \right) + \sum_{j> k} \left(\sum_{i\leq k}f_{i,j} \right)\\ &= 2\sum_{j\leq k} \left(\sum_{i>k}f_{i,j} \right)\qquad\\ &= 2\phi_{[k]} \pi[k]. \end{split} \end{equation}And clearly the right hand side is at least \(2\Phi x\), thus the claim holds. An analogous argument shows that \(x''-x\geq 2\Phi x\).

5.5 References

This part of the notes closely follows the expositions by Lau (week 6) and Spielman (2009-lecture 8).

6 Electric networks

We use the following notation in this section

- \(G=(V,E)\) an undirected graph

- We think of each edge \(e\in E\) as a resistor with resistance \(r_{e}\). We denote the conductance of \(e\) by \(w_{e}=\frac{1}{r_{e}}\). In this same context, we call \(\sum_{v\in N(u)}w_{uv}\) the weighted degree of \(u\) and we denote it by \(d_{u}\).

- We define the weighted Laplacian \(L_{G}\) as the matrix having the \(u,v\) entry equal to \(-w_{uv}\) if \(u\neq v\) and \(d_{u}\) is \(u=v\).

- The current flowing from \(u\) to \(v\) is denoted by \(f_{u,v}\). This is a directed quantity, so \(f_{u,v}=-f_{v,u}\).

- We use \(\phi_{u}\) to denote the potential of vertex \(u\). Thus \(\phi\in\mathbb{R}^{V}\) denotes the potential of the set of vertices.

- For \(u\in V\) we define \(\chi_{u}\in \mathbb{R}^{V}\) to be the \(u\)-th standard basis vector, or the vector whose entries are all zero except entry \(u\) which is 1.

- Let \(B\) be the \(m\times n\) matrix whose rows are indexed by

edges and columns are indexed by vertices. We have that row \(uv\in

E\) of \(B\) is \(\chi_{u}-\chi_{v}\) if \(u

- Using the same indexing for the rows as above, we define the \(m\times m\) matrix \(R\) as the diagonal matrix having the conductance of the edges along the diagonal, that is, \(r_{e,e}=r_{e}\). We also let \(W=R^{-1}\).

Ohm's law (or the potential flow law) states that the potential drop across a resistor is the current flowing times the resistance, or equivalently

\begin{equation} \label{eq:ohm} f_{u,v}r_{uv}=\phi_{u}-\phi_{b} \quad\text{ or }\quad f_{u,v}=w_{uv}(\phi_{u}-\phi_{v}). \end{equation}Kirchoff's law (or the flow conservation law): The sum of the currents entering a vertex \(u\) is equal to the currents leaving \(u\), or

\begin{equation} \label{eq:kirchoff} \sum_{v\in N(u)}f_{u,v}=f_{u}, \end{equation}where \(\extCur_{u}\) denotes the external current supplied or extracted at \(u\). If current is being supplied to \(u\) then \(\extCur_{a}>0\), \(\extCur_{u}<0\) if current is being extracted from \(u\), and \(\extCur_{u}=0\) otherwise. So \(\extCur\in\mathbb{R}^{V}\).

Observe that

\begin{equation} \nonumber \begin{split} \sum_{v\in N(u)}f_{u,v} &= \sum_{v\in N(u)}w_{uv}(\phi_{u}-\phi_{v})\\ &= \sum_{v\in N(u)}w_{uv}\phi_{u}-\sum_{v\in N(u)} w_{uv}\phi_{v}\\ &= \phi_{u} d_{u} - \sum_{v\in N(u)} w_{uv}\phi_{v}. \end{split} \end{equation}Therefore \(\phi_{u} d_{u} - \sum_{v\in N(u)} w_{uv}\phi_{v} = \extCur_{u}\) for all \(u\in V\). This can be rewritten as \(L_{G}\phi = \extCur\). Therefore \(\phi = L_{G}^{-1}\extCur\). However \(L_{G}\) does not have full rank, as \(\mathbb{1}\in \ker(L_{G})\). Thus, if \(L_{G}^{+}\) denotes the pseudo-inverse of \(L_{G}\) we may compute \(\phi\) from \(\extCur\) provided \(\extCur\perp\mathbb{1}\), and in this case we have that \(\phi = L_{G}^{+}\extCur\). In general, for any \(\extCur\in \mathbb{R}^{V}\) we have that \(\phi\in\{L_{G}^{+}\extCur + \alpha\mathbb{1}:\alpha\in\mathbb{R}\}\).

Now, given the vector of potentials, we may compute the currents at each edge and the external currents at each vertex. For the currents at the edges we do this in terms of the matrices \(B\) and \(W\) defined above. We have that \(f=WB\phi\). Now, for the external currents we obtain that \(B^{t}f=\extCur\).

6.1 Effective resistance

The effective resistance between \(u\) and \(v\), \(\effResist_{u,v}\), is defined to be \(\phi_{u}-\phi_{v}\), where \(\phi\in\mathbb{R}^{V}\) is the resulting vector of potentials when one unit of current is supplied to \(u\) and one unit of current is removed from \(v\), that is, \(\extCur_{u}=1\) and \(\extCur_{v}=-1\). Intuitively we may thing of \(\effResist_{u,v}\) as the resistance between \(u\) and \(v\) given by the rest of electrical network. Algebraically we have \(L_{G}\phi=\extCur\). Now, since in this case \(\extCur=\chi_{u}-\chi_{v}\), then \(\extCur\perp\mathbb{1}\). Therefore \(\phi=L_{G}^{+ }\extCur\). As a consequence we obtain that \(\effResist_{u,v}=(\chi_{u}-\chi_{v})^{\intercal}L_{G}^{+}(\chi_{u}-\chi_{v})\).

6.2 Energy

Joule's first law asserts that the energy dissipated per unit of time at a resistor \(ab\) is given by \(f_{a,b}^{2}r_{ab}\). We define the energy dissipated in the network with currents \(f\in \mathbb{R}^E\) as the sum of all the energy dissipated at the resistors of the network, that is,

\begin{equation} \begin{split} \mathcal{E}(f)&=f^{\intercal}R f \\ &= \sum_{uv\in E}f_{u,v}^{2}r_{uv}\\ &= \sum_{uv\in E}f_{u,v}(\phi_{u}-\phi_{v})\\ &= \sum_{uv\in E}w_{ab}(\phi_{u}-\phi_{v})^{2}\\ &= \phi^{\intercal}L_{G}\phi. \end{split} \end{equation}Note that when one unit of current is supplied to \(u\) and one unit is removed from \(v\) then we have \(\effResist_{u,v}=\mathcal{E}(f)\).

Let \(g\in\mathbb{R}^{E}\) be a current assignment on \(E\) that satisfies Kirchoff's flow conservation law The next theorem, known as Thompson's Principle, shows that among all such current assignments the one minimizing the energy dissipated in the network is precisely \(f=\chi_{u}-\chi_{v}\).

For any resistor network and any two vertices \(u\) and \(v\) we have that

\begin{equation} \nonumber \effResist_{u,v}\leq \mathcal{E}(g), \end{equation}where \(g\) is defined as above.

Let \(f\) be the the flow where one unit of flow is supplied to \(u\) and one unit of flow is removed from \(v\), that is, \(f=\chi_{u}-\chi_{v}\).

Observe that \(B^{\intercal}f=\chi_{u}-\chi_{v}\) since the entry corresponding to \(x\) is given by \(\sum_{y\in N(x)}f_{x,y}=\extCur_{x}\). Similarly \(B^{\intercal}g=\chi_{u}-\chi_{v}\).

Letting \(c=g-f\) we obtain \(B^{\intercal}c=0\), this \(\sum_{y\in N(x)}c_{x,y}=0\) for all \(x\in V\). Therefore

\begin{equation} \begin{split} \mathcal{E}(g) &= \sum_{uv\in E}g_{u,v}^{2}r_{uv}\\ &= \sum_{uv\in E}(f_{u,v}+c_{u,v})^{2}r_{uv}\\ &= \sum_{uv\in E}f_{u,v}^{2}r_{uv} + 2\sum_{uv\in E}f_{u,v}c_{u,v} r_{uv}+ \sum_{uv\in E}c_{u,v}^{2}r_{uv}. \end{split} \end{equation}Note that \(\sum_{uv\in E}c_{u,v}^{2}r_{uv}\geq 0\) and by Ohm's law we have that

\begin{equation} \begin{split} \sum_{uv\in E}f_{u,v}c_{u,v} r_{uv} &= \sum_{uv\in E}(\phi_{u}-\phi_{v})c_{u,v}\\ &= \sum_{uv\in E}(\phi_{u}c_{u,v}-\phi_{v}c_{u,v})\\ &= \sum_{u\in V }\phi_{u}\sum_{uv\in E}c_{u,v}=0. \end{split} \end{equation}Therefore \(\mathcal{E}(g)\geq\mathcal{E}(f)=\effResist_{u,v}\), as desired.

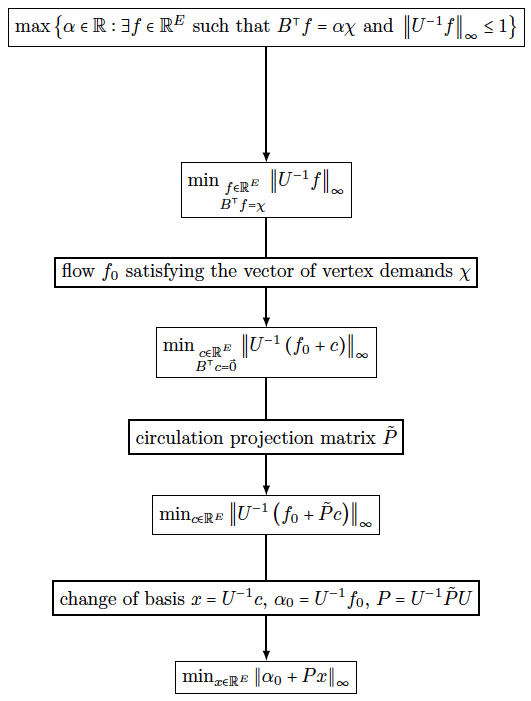

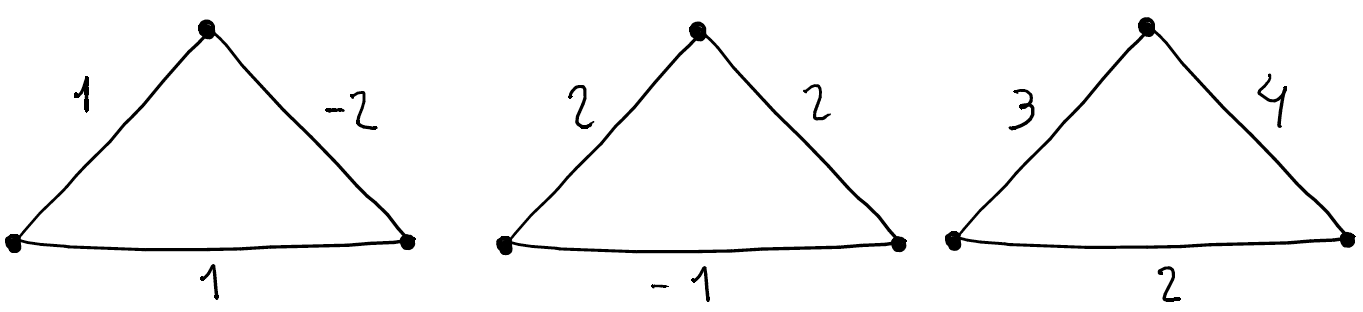

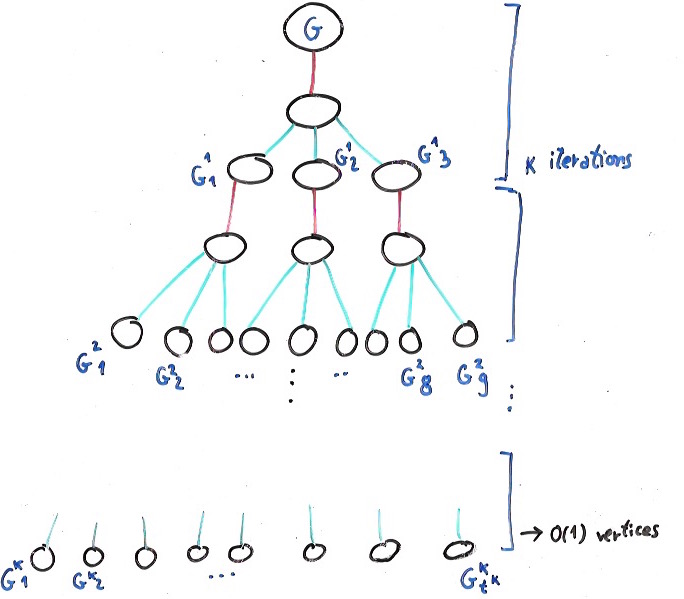

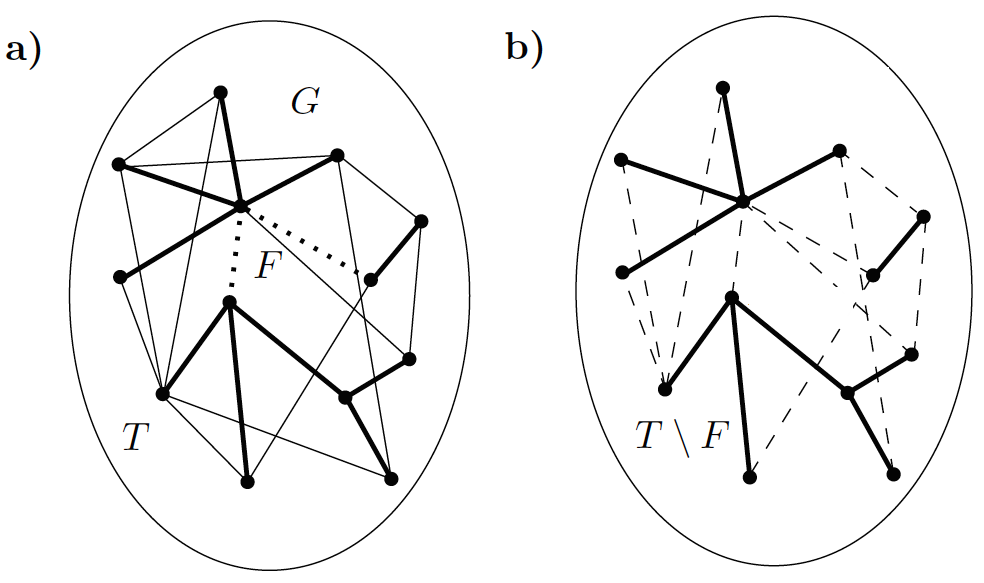

6.3 Effective Resistance as Distance